The undergraduate admissions process at elite universities, owing to its implications for socioeconomic mobility, is subjected to significant public scrutiny in the UK. Social commentators and politicians routinely call for 'scientific assessment' of existing admission methods (Hewstone 2013) while news media and thinktanks regularly publish reports documenting what appear superficially to be 'unfair' admission practices at selective universities.

It is generally accepted that access to elite universities should be based primarily on evidence of academic potential, purged of the contaminating effects of socioeconomic background. For example, Oxford claims to be " ... committed to recruiting the academically most able students, regardless of background", while Cambridge claims that its "aim is to offer admission to students of the greatest intellectual potential, irrespective of social, racial, religious and financial considerations". However, allegations of unfair admissions are usually based on differences in the percentage of successful applicants from different socio-demographic groups with no reference to the applicants’ future potential, e.g. their post-admission academic performance.

This is at odds with the economic view of 'fair treatment', based on productivity – an intellectual tradition going back at least to Becker (1971).1

Applied to the admissions context, this approach would require one to base the analysis of fairness on post-admission performance of admitted candidates. In particular, an academically fair, i.e. a purely meritocratic, admission process would set a common threshold for admissions such that candidates with predicted, future academic performance at or above the threshold are admitted and those below it are rejected. The ones whose expected performance exactly reaches the threshold (i.e. the ones expected to perform the worst among those admitted) may be regarded as the 'marginal' candidates. An academically fair admission process should equate the expected performance of the marginal admitted students – the admission threshold – across demographic and socio-economic groups. Indeed, if the admission threshold is higher for state-school pupils, then the marginal state-school candidate would be better than the marginal private-school one. Then there is likely to be another state-school applicant 'in between' the two, who was rejected but the university would be better off taking him/her instead of the worse private-school marginal candidate; this would contradict the notion of meritocratic admissions.
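The displacement argument above can be made concrete with a small sketch. All names, group labels and scores below are hypothetical and chosen purely for illustration; they are not taken from the admissions data discussed in this column. The sketch admits applicants by group-specific thresholds and then lists any rejected applicant whose predicted performance exceeds that of the weakest admitted one.

```python
from dataclasses import dataclass


@dataclass
class Applicant:
    name: str
    group: str        # illustrative labels: "state" or "private"
    predicted: float  # hypothetical predicted future exam score


def admit(applicants, thresholds):
    """Admit every applicant whose predicted score meets their group's threshold."""
    return [a for a in applicants if a.predicted >= thresholds[a.group]]


def displaced(applicants, thresholds):
    """Rejected applicants who are predicted to out-perform the weakest admit."""
    admitted = admit(applicants, thresholds)
    if not admitted:
        return []
    worst_admitted = min(a.predicted for a in admitted)
    admitted_names = {a.name for a in admitted}
    return [
        a for a in applicants
        if a.name not in admitted_names and a.predicted > worst_admitted
    ]


# Hypothetical applicant pool.
pool = [
    Applicant("A", "state", 68.0),
    Applicant("B", "state", 63.0),
    Applicant("C", "private", 66.0),
    Applicant("D", "private", 61.0),
]

common = {"state": 62.0, "private": 62.0}   # one bar for everyone
unequal = {"state": 65.0, "private": 60.0}  # higher bar for state-school pupils

print([a.name for a in displaced(pool, common)])
print([a.name for a in displaced(pool, unequal)])
```

Under the common threshold nobody is displaced, whereas under the unequal thresholds the rejected state-school applicant B is predicted to out-perform the admitted private-school applicant D, which is the situation a meritocratic process should rule out.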

Unfortunately, it is difficult for a researcher to detect these underlying thresholds from typically available admissions data. This is because it is impossible to know who the marginal candidates are within each demographic group when the decision to admit is based partly on factors unobserved by the researcher. Examples include the quality of the reference from the applicant’s high school or the degree of intellectual enthusiasm conveyed by the applicant’s personal statement. Such characteristics are difficult to record in a way that future researchers can access without compromising confidentiality, unlike test scores, which are both anonymous and easily retrievable. Importantly, the distributions of such unobservable characteristics are likely to differ across demographic and socioeconomic groups, jeopardising the researcher’s attempt at reconstructing an admission officer's perception of a candidate’s academic potential (cf. Heckman 1998 on similar issues pertaining to labour-market hiring).

New evidence

In a recent research project (Bhattacharya et al. 2013) we attempt to construct a test of academically fair admissions in the face of such missing information. We apply our test to admissions data from a large undergraduate degree programme in one of the UK’s leading universities. The dataset is constructed by matching three years of applicant data with the eventual performance of the admitted students in the first- and third-year examinations. These examinations are blindly marked and serve as our measure of future productivity.

The overall application success rates in this programme are about two percentage points lower for females and for applicants from state-run (as opposed to expensive independent) schools, suggesting a superficial bias against them. However, our analysis based on admission thresholds paints a very different picture.

In particular, instead of identifying the marginal candidates, we attempt to directly identify the difference in admission thresholds faced by applicants from different social or demographic groups. We get around the problem of unobservable characteristics via an intuitive assumption: applicants who are better on every easily observable test score – such as the percentage of A/A* grades in GCSE and scores on the various components of the entrance test – are also statistically (but not certainly) more likely to appear better to admission tutors, based on characteristics observable by them but not by us. This assumption is shown to yield a lower bound on the differences in admission thresholds faced by different groups. If the lower bound on the threshold difference between, say, men and women is found to be positive, then one can conclude that men face a higher academic bar for gaining a place, contradicting the notion of strictly meritocratic admissions.

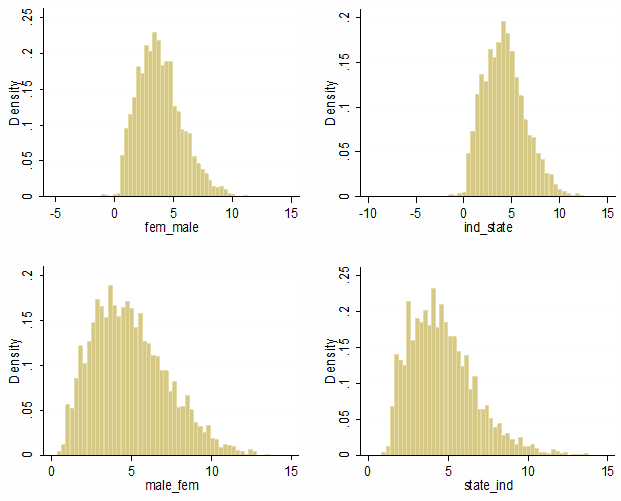

We first provide some suggestive evidence in support of our key identifying assumption. In particular, we show that candidates who are better in terms of GCSE and A-level performance and every component of the entrance test have statistically higher scores on the admission interview. The latter is the most 'subjective' of all the assessments and, arguably, the most similar in nature to characteristics most likely to remain unobserved to a researcher. Figure 1 shows the histograms of the difference in median interview scores across those pairs of applicants where the first dominates the second in terms of every observable test score (the top left figure, for instance, compares female-male pairs where the female applicant has scored higher in every test). Statistical dominance, as proposed in our assumption, would imply that these histograms should have strictly positive support, up to sampling error – which is clearly borne out by the graph.
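The dominance comparison described above can be sketched as follows. This is a simplified pairwise illustration, not the exact procedure behind Figure 1: the field names (`gcse`, `test`, `interview`) and all numbers are hypothetical. For every ordered pair in which the first applicant scores strictly higher on every observed measure, the sketch records the gap in interview scores; under the dominance assumption these gaps should be positive up to sampling error.

```python
from itertools import permutations
from statistics import median


def dominance_gaps(applicants, score_keys, outcome_key):
    """Outcome gaps (first minus second) over all ordered pairs in which the
    first applicant is strictly higher on every score in score_keys."""
    gaps = []
    for first, second in permutations(applicants, 2):
        if all(first[k] > second[k] for k in score_keys):
            gaps.append(first[outcome_key] - second[outcome_key])
    return gaps


# Hypothetical records: share of A/A* at GCSE, entrance-test score, interview score.
applicants = [
    {"gcse": 0.9, "test": 70, "interview": 7.5},
    {"gcse": 0.8, "test": 65, "interview": 6.0},
    {"gcse": 0.7, "test": 72, "interview": 6.5},
    {"gcse": 0.6, "test": 60, "interview": 5.0},
]

gaps = dominance_gaps(applicants, ["gcse", "test"], "interview")
print(len(gaps), min(gaps), median(gaps))
```

In this toy pool four pairs satisfy dominance, and every interview gap is positive. In real data one would also restrict pairs by group (for example female-male pairs only) before plotting the distribution of gaps.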

Figure 1. Difference in median interview scores

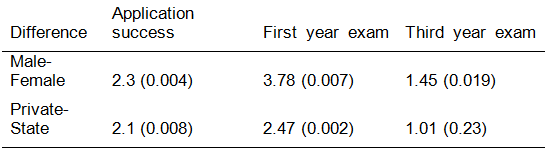

Next, applying our identification methodology to the admissions data, we find that the marginal female applicants perform, on average, at least 3.8 percentage points lower than the marginal male applicants in the first-year examinations and at least 1.4 percentage points lower in the final examinations (Table 1). These differences correspond to about 80% and 25%, respectively, of a standard deviation of the overall score distributions. In contrast, applicants from private schools are held to a slightly higher bar of expected future performance than state-school applicants (about 2.5 percentage points higher in the first year and a statistically insignificant but numerically positive amount in the finals).

Table 1. Percentage-point differences in application success rates and admission-thresholds (p-values in parentheses)

The exact magnitudes vary slightly across statistical specifications, but three empirical findings are robust across all of them:

- The gender gap is large, persistent and statistically significant in every case.

- The private-state school difference is comparatively much smaller.

- Significant 'catch-up' occurs between the first and the third year.

Presumably, some of this catch-up occurs by way of actual improvement and some of it occurs via efficient sorting of students into optional papers in the third year, in contrast to the first year when everyone sits the same examination.

Author's note: The opinions expressed in this article are solely those of the author and do not represent the views of either the University of Oxford or St Hilda’s College.

References

Anwar, S and H Fang (2011), "Testing for the role of prejudice in emergency departments using bounceback rates", NBER Working Paper 16888.

Beck, T, P Behr and A Madestam (2012), "Sex and credit: Is there a gender bias in lending?", VoxEU.org, 16 September.

Becker, G (1971), The Economics of Discrimination, University of Chicago Press.

Bhattacharya, D, S Kanaya and M Stevens (2013), "Are University Admissions Academically Fair?", University of Oxford Working Paper, June.

Booth, A, P Nolen and L Cardona Sosa (2012), "Why aren’t there more women in top jobs?", VoxEU.org, 20 December.

Chandra, A and D Staiger (2009), "Identifying provider prejudice in medical care", Mimeo, Harvard University and Dartmouth College.

Grosvold, J, S Pavelin and I Tonks (2012), "Gender diversity on company boards", VoxEU.org, 27 March.

Heckman, J (1998), "Detecting discrimination", Journal of Economic Perspectives, 12-2, 101-116.

Hewstone, M (2013), "Selection Mechanism", Times Higher Education, 3 January.

Paserman, D (2007), "Gender-linked performance differences in competitive environments: Evidence from pro tennis", VoxEU.org, 26 June.

Tavares, J and T Cavalcanti (2007), "Gender discrimination lowers output per capita", VoxEU.org, 26 October.

1 Becker’s insights have been applied to the analysis of gender gaps in wages and recruitment (cf. Paserman 2007, Tavares and Cavalcanti 2007, Grosvold et al. 2012, and Booth et al. 2012), and to the approval of loan applications and healthcare provision (cf. Beck et al. 2012, Chandra and Staiger 2009, and Anwar and Fang 2011).