Children in many extremely poor, remote regions are growing up illiterate and innumerate despite high reported school enrolment ratios (Pritchett 2013, Glewwe and Muralidharan 2016). This phenomenon of ‘schooling without learning’ has many alleged sources, such as insufficient demand, inadequate schooling materials, and a lack of qualified, motivated teachers. As a result, a substantial part of developing countries’ populations remains illiterate and innumerate. The consequences are dire: for these groups, lower expected lifetime incomes and less opportunity to succeed in the growing worlds around them; for the rest of the world, greater socioeconomic inequality.

The limitations of previous solutions

This problem is no secret. In the last few decades, several hundred programme evaluations have been conducted to assess different proposed methods to raise learning levels in developing countries. A series of systematic reviews and meta-analyses – notably Kremer and Holla (2009), McEwan (2015), Evans and Popova (2016), Ganimian and Murnane (2016), and Glewwe and Muralidharan (2016) – have summarised and aggregated these studies. When successful, interventions generally yield small to moderate learning gains. Learning gains are commonly measured in standard deviations of test scores. By this metric, successful interventions generally yield a gain of 0.1 to 0.3 standard deviations in learning (Evans and Yuan 2020).

Such gains fall substantially short of addressing the desperately low learning levels in many parts of the developing world. As Pritchett (2013) illustrates, it would take dozens or hundreds of such interventions to address the inequality in learning between poor developing countries and their richer counterparts, and even more to address the gap between developing countries and countries in the OECD. In pockets of extreme poverty, where the baseline condition is functional illiteracy, the need for dramatic intervention is even larger.
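To make the scale of the problem concrete, the sketch below shows how a learning gain can be expressed in standard deviations and how many gains of a given size would be needed to close a learning gap. It is only an illustration: the function names, the test scores, and the 2 standard deviation gap are hypothetical, and the assumption that gains from separate interventions simply add up is a deliberate simplification.

```python
import math
from statistics import mean, stdev


def standardised_gain(treated_scores, control_scores):
    """Difference in mean scores, expressed in control-group standard deviations."""
    return (mean(treated_scores) - mean(control_scores)) / stdev(control_scores)


def interventions_needed(gap_sd, gain_sd):
    """Number of gains of size gain_sd needed to cover gap_sd, assuming gains add up."""
    if gain_sd <= 0:
        raise ValueError("gain_sd must be positive")
    # Round before taking the ceiling to avoid floating-point noise.
    return math.ceil(round(gap_sd / gain_sd, 9))


# Hypothetical test scores (not data from any study).
treated = [60, 70, 80]
control = [40, 50, 60]
print(standardised_gain(treated, control))

# Hypothetical gap of 2 standard deviations, closed with gains of 0.1 or 0.3 SD each.
for gain in (0.1, 0.3):
    print(gain, interventions_needed(2.0, gain))
```

Under these assumed numbers, a gap of this size would require between 7 and 20 typical successful interventions stacked on top of one another.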

The promise of bundled interventions

Recently, interest has grown among economists in the potential for ‘bundled’ interventions to generate transformative change. Advocates for this brand of intervention explain that, in cases of extreme need, interventions with multiple complementary facets have the potential to improve outcomes far more than the sum of the estimated efficacy of their constituent parts. The most famous example is Banerjee et al. (2015), which reports the results of a six-country study of such a bundled intervention aiming to improve the livelihoods of the very poor. The intervention combined a large asset transfer with training, coaching, and other support. The study found that this intervention led to large sustained gains in income, consistent with an ‘escape’ from extreme poverty.

Evaluating a bundled education intervention in rural Guinea Bissau

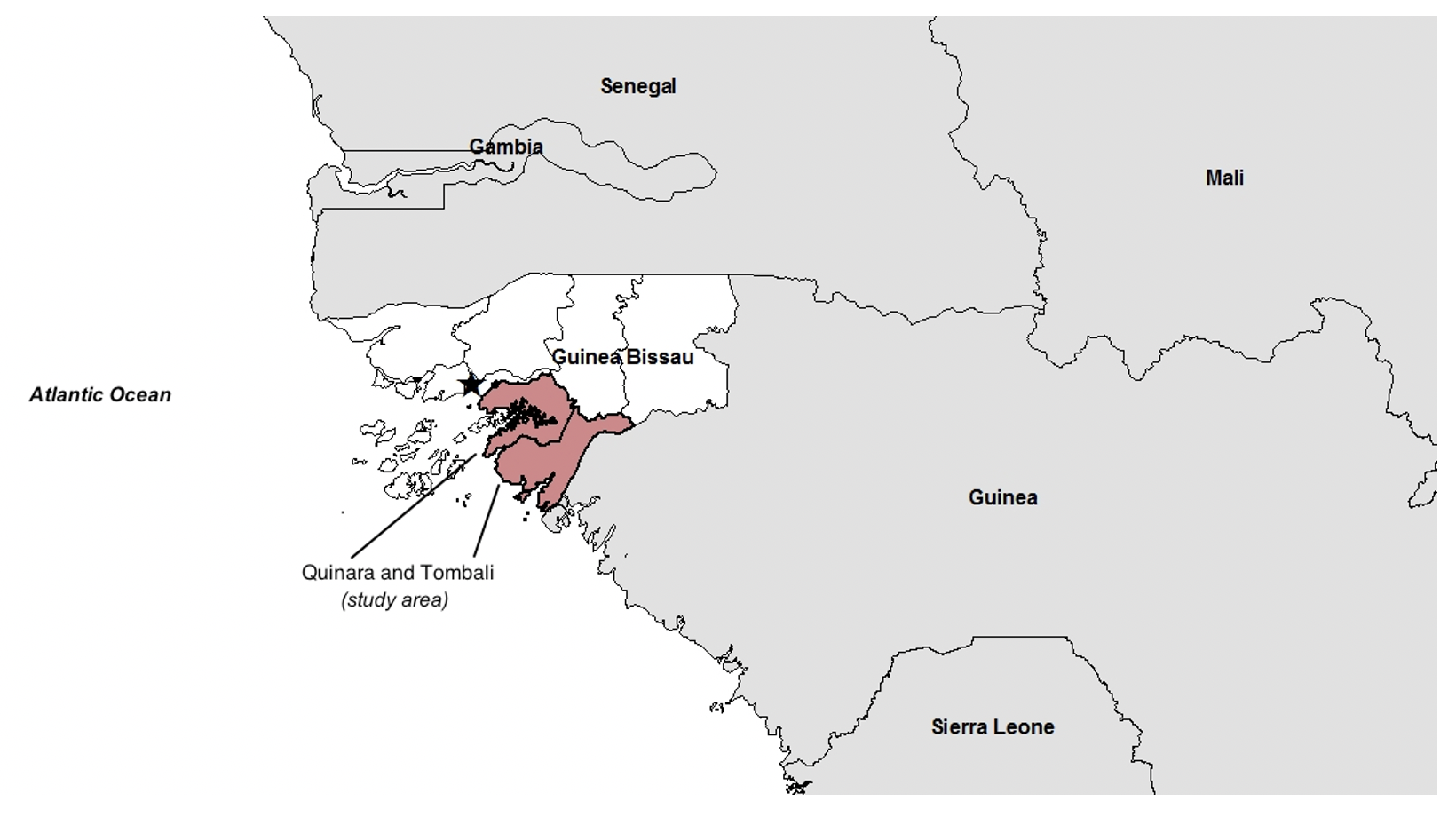

In a recent paper (Fazzio et al. 2020), we report the results of a study designed to assess whether bundled interventions in education can have similarly transformative effects on the learning of primary-aged children in pockets of extreme poverty. We conducted the study in rural areas of Guinea Bissau, one of the poorest countries on the planet and one in which the vast majority of the children are growing up illiterate and innumerate (Boone et al. 2014). We show our study area in Figure 1.

Figure 1 Study area

The intervention provided four years of schooling: first, a year of pre-primary school focusing on Portuguese language acquisition, and then grades one to three of the national primary education curriculum, meant to take the place of the official instruction usually delivered by Guinea Bissau’s government educational system in these years. The intervention involved several components, including:

- Hiring trained teachers from the capital to live in remote villages and teach children in their home village

- Training these teachers to deliver a bespoke curriculum focused on skills acquisition and child participation

- Monitoring teachers regularly with a focus on improving teacher practice, and providing at least 18 days of in-class observation per year, with feedback for each teacher

- Providing adequate resources in terms of salaries, teaching materials, and other inputs to ensure a high level of fidelity for implementation.

Implementing this intervention turned out to be intensely challenging. We chose to work in small, isolated villages, and the rugged terrain, long distances between villages, and poor state of the roads between them made frequent, spontaneous monitoring difficult, particularly during the rainy season, when some villages become inaccessible. These villages lacked internet connection and reading materials and had few or no literate residents who might reinforce child learning. This isolation also made it difficult to recruit qualified teachers, who were required to reside in the village. Finally, none of the parents enumerated were native speakers of Portuguese, the official language of the curriculum and of the intervention, which restricted children’s ability to practise and apply the lessons from class outside of school. Nonetheless, the team administering the intervention was able to implement it at a high level of fidelity. Their tireless work ensured that all lessons were completed in a given year and that each school progressed according to the planned curricular strategy.

We found transformative learning gains

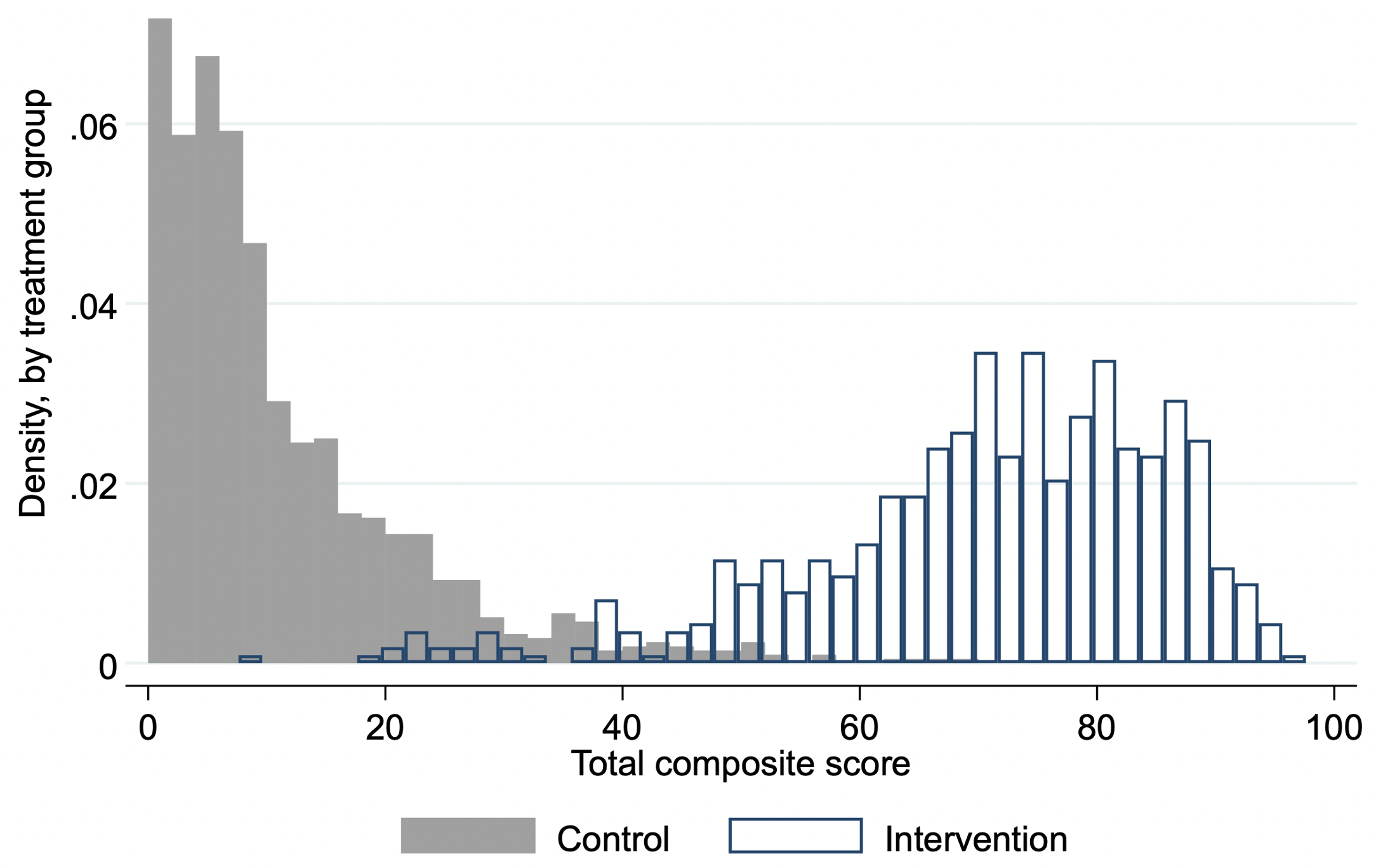

At the end of four years, a team of enumerators returned to all villages to administer face-to-face tests of children’s reading and math ability. What they found was astonishing. Children who had been receiving the intervention scored more than 59 percentage points better than children who had not. On average, ‘intervention children’ answered 70.5% of the test questions correctly, whereas ‘control children’ answered only 11.2% correctly. In Figure 2, we show the distribution of these scores.

Figure 2 Distribution of test scores

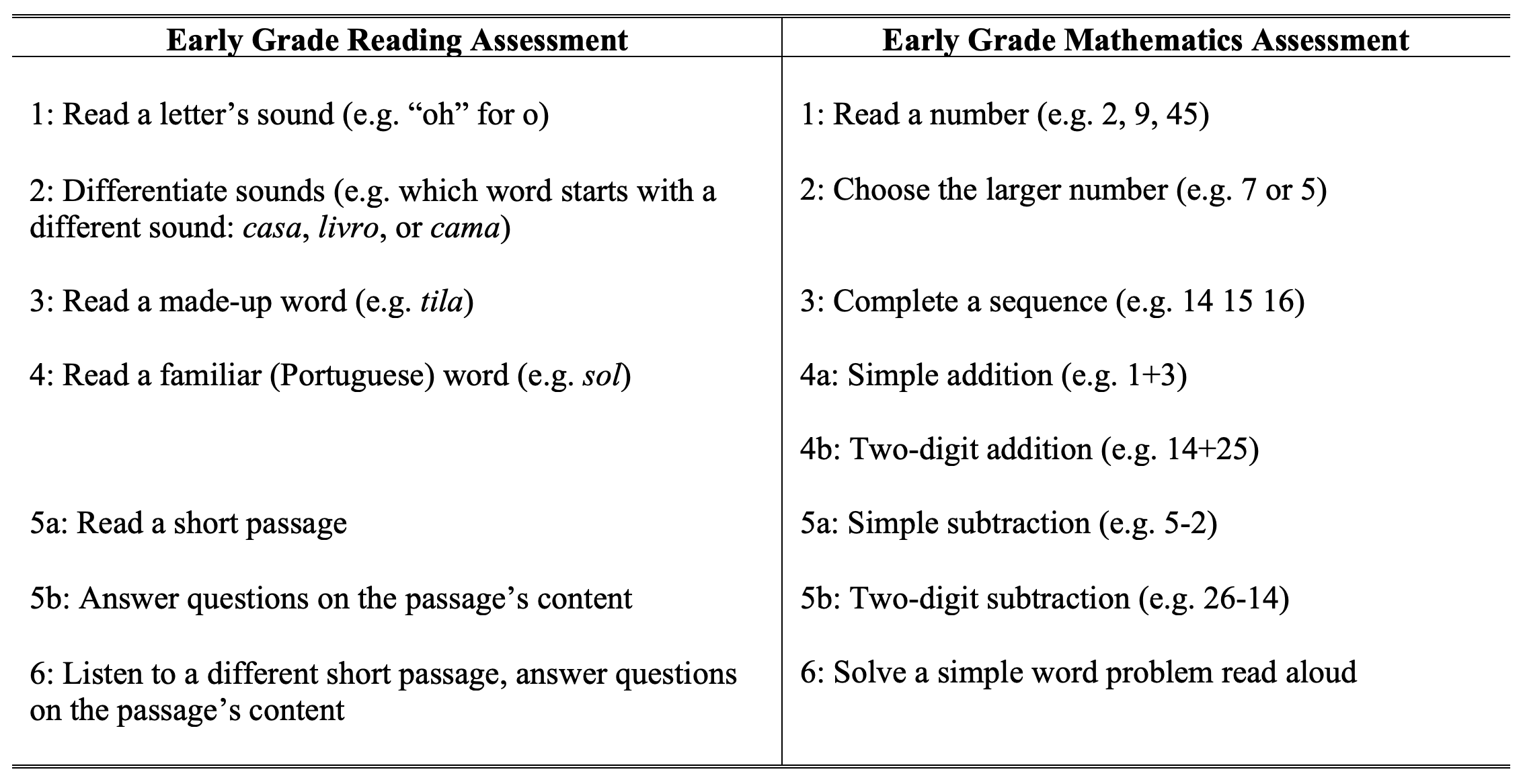
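The headline comparison is a difference in percentage points, not a percentage change, and the two are easy to confuse. A minimal sketch of the arithmetic, using only the two average scores reported above (the function names are illustrative):

```python
def percentage_point_gap(treated_pct, control_pct):
    """Absolute difference between two percentages, in percentage points."""
    return round(treated_pct - control_pct, 1)


def relative_ratio(treated_pct, control_pct):
    """How many times larger the treated share is than the control share."""
    return treated_pct / control_pct


# Average share of test questions answered correctly, as reported in the text.
intervention_mean = 70.5
control_mean = 11.2

print(percentage_point_gap(intervention_mean, control_mean))  # 59.3
print(round(relative_ratio(intervention_mean, control_mean), 2))
```

The gap of 59.3 percentage points corresponds to intervention children answering more than six times as many questions correctly, on average, as control children.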

We used ‘Early Grade Reading Assessment-style’ and ‘Early Grade Mathematics Assessment-style’ tests to assess learning levels. These tests are administered one-on-one, orally, instead of using a written test paper, and are commonly used in contexts like rural Guinea Bissau, where learning levels are very low (Platas et al. 2014, Dubeck and Gove 2015). They comprise various tasks, as shown in Table 1.

Table 1 Tasks/competencies assessed in the reading and math tests

Another way of measuring learning levels in these tests is to look at the extensive margin instead of the intensive one, that is, to measure the proportion of children who scored zero on a given task. In Figures 3 and 4, we do just this, showing the proportion of control and intervention children with zero scores in reading and maths tasks, respectively.

Figure 3 Zero-scores for reading tasks

Figure 4 Zero-scores for maths tasks

These figures show that the majority of control children could not answer even one higher-level task successfully in reading or maths (with the exception of maths subtask six, which was a maths problem read aloud to the child). These children were already at least ten years old and, if prior data serve as a guide, were likely to soon leave school if they had not done so already. We conclude from these results that the counterfactual condition for children in this area is a lifetime of functional illiteracy and innumeracy.
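The distinction between the extensive and intensive margins can be sketched in a few lines. The example below computes the share of children scoring zero on a task (the extensive margin) alongside the mean score (the intensive margin). The scores are invented purely for illustration and are not data from the study; the function names are likewise hypothetical.

```python
def zero_score_share(scores):
    """Share of children who answered no item correctly on a task."""
    if not scores:
        raise ValueError("scores must not be empty")
    return sum(1 for s in scores if s == 0) / len(scores)


def mean_score(scores):
    """Average number of items answered correctly on a task."""
    if not scores:
        raise ValueError("scores must not be empty")
    return sum(scores) / len(scores)


# Invented per-child scores on a single ten-item task (illustration only).
control_scores = [0, 0, 0, 2, 0, 1, 0, 0]
intervention_scores = [5, 7, 0, 9, 6, 8, 10, 4]

for label, scores in (("control", control_scores),
                      ("intervention", intervention_scores)):
    print(label, zero_score_share(scores), mean_score(scores))
```

Reporting the zero-score share next to the mean helps to separate children who could not attempt a task at all from those who attempted it but scored poorly.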

Another commonly used measure of learning is the oral reading fluency (ORF) of children. The oral reading fluency of children randomised to receive the intervention was 75 correct words per minute. This compares favourably with the oral reading fluency measured in a 2014 national assessment of third grade students in the Philippines, a country with a per-capita GDP nearly an order of magnitude larger than that of Guinea Bissau.1 It is also much higher than oral reading fluency measures from other African countries that have used similar tests. In short, the learning gains from the intervention endowed these children with literacy and numeracy skills far superior to those in neighbouring, more rapidly developing countries, and comparable even to those in a much more prosperous lower-middle-income country.

What this means for the world

The main message we draw from these results is that we may need to think bigger when it comes to addressing the very low levels of learning in this and similar contexts. This work, in conjunction with Eble et al. (forthcoming), shows that the upper bound on the magnitude of intervention-driven learning gains in such deprived areas is much larger than previously thought. While our implementation of these interventions was quite expensive – costing more than $400 per child per year – the learning gains are truly transformative. Our cost-benefit analysis suggests that the intervention is highly resource efficient. Furthermore, other work from West Africa suggests that such learning gains may have spillovers across families, villages, and generations, which we will need more time to be able to capture (Wantchekon et al. 2015). The devil, of course, is in the details. Future work must address how this type of gain can be realised, either within the government system or in cooperation with it. Nonetheless, our work shows that, with sufficient resources and will, literacy and numeracy can be achieved even in the world’s most deprived areas.

References

Banerjee, A, E Duflo, N Goldberg, D Karlan, R Osei, W Parienté, J Shapiro, B Thuysbaert and C Udry (2015), “A Multifaceted Program Causes Lasting Progress for the Very Poor: Evidence from Six Countries”, Science 348 (6236).

Boone, P, I Fazzio, K Jandhyala, C Jayanty, G Jayanty, S Johnson, V Ramachandran, F Silva and Z Zhan (2014), “The Surprisingly Dire Situation of Children’s Education in Rural West Africa: Results from the CREO Study in Guinea-Bissau (Comprehensive Review of Education Outcomes)”, in Edwards, S, S Johnson and D N Weil (eds) African Successes, Volume II: Human Capital, Chicago, IL: University of Chicago Press.

Dubeck, M M and A Gove (2015), “The Early Grade Reading Assessment (EGRA): Its Theoretical Foundation, Purpose, and Limitations”, International Journal of Educational Development 40: 315-322.

Eble, A, C Frost, A Camara, B Bouy, M Bah, M Sivaraman and J Hsieh (2021), “How Much Can We Remedy Very Low Learning Levels in Rural Parts of Low-Income Countries? Impact and Generalizability of a Multi-Pronged Para-Teacher Intervention from a Cluster-Randomized Trial in The Gambia”, Journal of Development Economics 148: 102539, forthcoming.

Evans, D K and A Popova (2016), “What Really Works to Improve Learning in Developing Countries? An Analysis of Divergent Findings in Systematic Reviews”, The World Bank Research Observer 31(2): 242-270.

Evans, D K and F Yuan (2020), “How Big Are Effect Sizes in International Education Studies?”, Center for Global Development, Working Paper 545.

Fazzio, I, A Eble, R L Lumsdaine, P Boone, B Bouy, P-T J Hsieh, C Jayanty, S Johnson and A F Silva (2020), “Large Learning Gains in Pockets of Extreme Poverty: Experimental Evidence from Guinea Bissau”, NBER Working Paper 27799.

Ganimian, A and R Murnane (2016), “Improving Education in Developing Countries: Lessons From Rigorous Impact Evaluations”, Review of Educational Research 86 (3): 719-755.

Glewwe, P and K Muralidharan (2016), “Improving Education Outcomes in Developing Countries: Evidence, Knowledge Gaps, and Policy Implications”, in Hanushek, E A, S Machin and S Woessmann (eds) Handbook of the Economics of Education 5: 653-743. Amsterdam, NL: Elsevier.

Kremer, M and A Holla (2009), “Improving Education in the Developing World: What Have We Learned from Randomized Evaluations?”, Annual Review of Economics 1(1): 513-542.

McEwan, P J (2015), “Improving Learning in Primary Schools of Developing Countries: A Meta-Analysis of Randomized Experiments”, Review of Educational Research 85(3): 353-394.

Platas, L M, L Ketterlin-Geller, A Brombacher and Y Sitabkhan (2014), “Early Grade Mathematics Assessment (EGMA) Toolkit”, RTI International, Research Triangle Park, NC.

Pritchett, L (2013), The Rebirth of Education: Schooling Ain’t Learning, Center for Global Development Books.

Wantchekon, L, M Klašnja and N Novta (2015), “Education and Human Capital Externalities: Evidence from Colonial Benin”, The Quarterly Journal of Economics 130(2): 703-757.

Endnotes

1 Accessed from https://earlygradereadingbarometer.org/overview on October 28, 2019.