In order to inform policymaking, we need answers to both causal and predictive questions. Kleinberg et al. (2015) provide an example that helps us draw a distinction between the two. A policymaker taking action against a drought might need to know whether a rain dance will produce the intended effects. This is a purely causal question. But other rain-related decisions, such as whether to distribute umbrellas, only require a good prediction for the likelihood of bad weather, because the gain of holding an umbrella is known, at least with some degree of approximation. This is what Kleinberg et al. (2015) call a ‘prediction policy problem’.

Most of the policy evaluation literature has focused on causal questions. The ‘credibility revolution’ in econometrics (Angrist and Pischke 2010) has provided us with tools for the ex post estimation of the causal effects of public programmes. Nevertheless, the impact of a policy also depends on how effective it is in selecting its targets. Predicting which target may garner a larger return can be crucial when resources are scarce. This is where machine learning comes in handy. Machine learning algorithms developed in statistics and computer science (Hastie et al. 2009) have proven particularly powerful for predictive tasks (Mullainathan and Spiess 2017).[1]

We present two examples of how to employ machine learning to target those groups that could plausibly gain more from the policy.[2] The examples illustrate the benefits of machine-learning targeting when compared to the standard practice of implementing coarse assignment rules based on arbitrarily chosen observable characteristics. Only ex post evaluation methods, however, will eventually tell us whether targeting-on-prediction delivers the intended gains. The column also discusses some of the issues (transparency, manipulation, etc.) that arise when machine learning algorithms are used for public policy.

Examples of machine learning targeting

In Andini et al. (2018a), we consider a tax rebate scheme introduced in Italy in 2014 with the purpose of boosting household consumption. The Italian government opted for a coarse targeting rule and provided the rebate only to employees with annual income between €8,145 and €26,000. Given the policy objective, an alternative could have been to target consumption-constrained households, who are supposed to consume more out of the bonus.

Surveys often contain self-reported measures that can be used as proxies of consumption constraints. We use the 2010 and 2012 waves of the Bank of Italy’s Survey on Household Income and Wealth (SHIW), which asked households about their ability to make ends meet. We employ off-the-shelf machine learning methods to predict this condition based on a large set of variables that could potentially be used for targeting. Our preferred algorithm is the decision tree, which leads to the assignment mechanism shown in Figure 1. It selects only a few variables, essentially referring to household income and wealth. For instance, households with financial assets below €13,255 and household disposable income below €36,040 are predicted to be consumption-constrained. The other groups predicted to be consumption-constrained can be read directly from the figure.
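As a rough illustration, the single branch quoted above can be written as a simple rule. This is only a sketch: the two thresholds come from the text, while the `Household` class and its field names are hypothetical (the SHIW variable names are not given here), and the remaining branches of the tree in Figure 1 are not reproduced.

```python
from dataclasses import dataclass

# Thresholds quoted in the text for one branch of the tree in Figure 1.
FINANCIAL_ASSETS_CUTOFF = 13_255   # euros
DISPOSABLE_INCOME_CUTOFF = 36_040  # euros


@dataclass
class Household:
    # Hypothetical field names, chosen for illustration only.
    financial_assets: float
    disposable_income: float


def in_quoted_constrained_branch(h: Household) -> bool:
    """Return True if the household falls in the branch described in the text.

    Only this one branch is encoded. False therefore means "not in this
    branch", not "predicted to be unconstrained", because other branches
    of the tree may also predict a constrained status.
    """
    return (h.financial_assets < FINANCIAL_ASSETS_CUTOFF
            and h.disposable_income < DISPOSABLE_INCOME_CUTOFF)
```

A rule of this form is easy to communicate to applicants, which is part of the appeal of a shallow decision tree.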

Figure 1 Decision-tree prediction model for consumption-constrained status

Even if we have reasons to believe that households predicted to be consumption-constrained are more likely to consume out of the rebate, nothing ensures that this is the case (Athey 2017). To lessen these concerns, we separately estimate, on 2014 SHIW data, the effect of the bonus for households predicted to be consumption-constrained and for those that are not. To do this, we go back to standard econometrics, assuming selection on observables and running regressions.

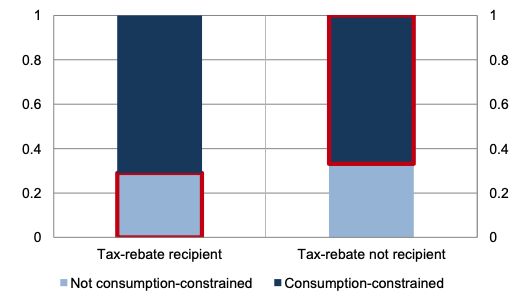

The estimated effect is positive and significant only for the predicted consumption-constrained households. The distribution of tax-rebate recipients suggests that great gains could be achieved by using machine learning for targeting (Figure 2, bordered in red): around one-third of the actual beneficiaries are predicted to be not consumption-constrained, while about two-thirds of non-recipients are predicted to be constrained.

Figure 2 Fraction of predicted consumption-constrained by tax-rebate recipient status

In the second application (Andini et al. 2018b), we focus on the ‘prediction policy problem’ of assigning public credit guarantees to firms. These schemes aim at supporting firms’ access to bank credit by providing publicly funded collateral. In principle, guarantee schemes should target firms that are both creditworthy, to ensure the financial sustainability of the scheme, and rationed, because other firms would likely receive credit anyway (World Bank 2015). In practice, existing guarantee schemes are usually based on naïve rules that exclude borrowers with low creditworthiness. We propose an alternative assignment mechanism based on machine-learning predictions, in which both creditworthiness and credit rationing are explicitly addressed.

The investigation refers to Italy, where a public Guarantee Fund has been in operation since 2000. Using micro-level data from the credit register, which includes measures for both credit rationing[3] and the emergence of bad loans, we identify the hypothetical target firms and estimate a machine-learning model to predict this status. In this case, our favourite machine-learning algorithm is the random forest, which is essentially the average of many decision trees.
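The idea that a random forest averages many trees can be sketched in a few lines. The code below is a deliberately simplified toy, not the model estimated in the paper: each "tree" is a one-split stump fitted on a bootstrap sample using one randomly drawn feature, and the forest prediction is the average of the stumps' predicted probabilities. All function names, the two features, and the toy data are assumptions made for illustration.

```python
import random


def fit_stump(rows, labels, feature):
    """Fit a one-split tree on a single feature by minimising squared error."""
    best = None
    values = sorted({r[feature] for r in rows})
    for lo, hi in zip(values, values[1:]):
        cut = (lo + hi) / 2
        left = [y for r, y in zip(rows, labels) if r[feature] <= cut]
        right = [y for r, y in zip(rows, labels) if r[feature] > cut]
        p_left, p_right = sum(left) / len(left), sum(right) / len(right)
        sse = (sum((y - p_left) ** 2 for y in left)
               + sum((y - p_right) ** 2 for y in right))
        if best is None or sse < best[0]:
            best = (sse, cut, p_left, p_right)
    if best is None:  # the feature is constant in this bootstrap sample
        share = sum(labels) / len(labels)
        return feature, float("inf"), share, share
    _, cut, p_left, p_right = best
    return feature, cut, p_left, p_right


def fit_forest(rows, labels, n_trees=200, seed=0):
    """Fit n_trees stumps, each on a bootstrap sample and a random feature."""
    rng = random.Random(seed)
    n, n_features = len(rows), len(rows[0])
    forest = []
    for _ in range(n_trees):
        idx = [rng.randrange(n) for _ in range(n)]  # sample with replacement
        feature = rng.randrange(n_features)         # one random feature per tree
        forest.append(fit_stump([rows[i] for i in idx],
                                [labels[i] for i in idx], feature))
    return forest


def predict(forest, row):
    """Average the predicted probabilities of all trees in the forest."""
    votes = [p_left if row[f] <= cut else p_right
             for f, cut, p_left, p_right in forest]
    return sum(votes) / len(votes)


# Made-up toy data: two hypothetical firm-level scores; label 1 = target firm.
rows = [(0.1, 0.2), (0.2, 0.1), (0.3, 0.3), (0.15, 0.25),
        (0.8, 0.9), (0.9, 0.8), (0.7, 0.7), (0.85, 0.75)]
labels = [0, 0, 0, 0, 1, 1, 1, 1]

forest = fit_forest(rows, labels)
score_low = predict(forest, (0.1, 0.1))
score_high = predict(forest, (0.9, 0.9))
```

A production random forest grows deeper trees and considers a random subset of features at each split; the averaging step, however, works as in `predict`. Because the final score blends hundreds of different rules, it is much harder to summarise than a single tree.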

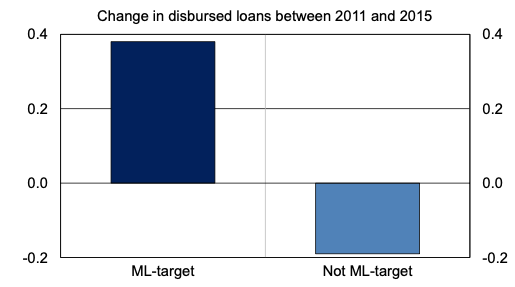

To assess whether targeting these firms will improve the scheme’s impact on credit availability, we first consider the set of firms that received the guarantee and split them into two groups, based on whether machine learning predicts them to be a target or not. A simple comparison of the growth rate of disbursed bank loans in the years following the provision of the guarantee shows that the machine-learning-targeted group performed better (Figure 3). The result is confirmed by applying a more rigorous regression discontinuity design (as in de Blasio et al. 2018), which shows a larger impact of the guarantee on credit availability for the machine-learning-target group.
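The simple comparison described above amounts to a difference in group means. The sketch below uses made-up numbers purely to show the mechanics; they are not the paper's data or results, and the record layout and function name are hypothetical. A raw gap of this kind is descriptive only, which is why it would need to be checked with a causal design.

```python
from statistics import mean

# Made-up records for illustration only: (predicted_target, loan_growth).
firms = [
    (True, 0.12), (True, 0.08), (True, 0.10),
    (False, 0.03), (False, 0.05), (False, 0.01),
]


def mean_growth_by_group(records):
    """Return the mean loan growth for each predicted-target status."""
    groups = {True: [], False: []}
    for is_target, growth in records:
        groups[is_target].append(growth)
    # Skip empty groups so that mean() is never called on an empty list.
    return {status: mean(values) for status, values in groups.items() if values}


means = mean_growth_by_group(firms)
# Raw gap between beneficiaries predicted to be targets and the others.
gap = means[True] - means[False]
```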

Figure 3 Performance of Guarantee Fund beneficiary firms by machine learning-target status

Issues with machine learning targeting

Applying machine-learning methods to policy targeting requires dealing with some controversial topics. One concern is the interpretability of the decision rule, which may come at the expense of precision. In some applications, simple algorithms are sufficiently accurate, as with the decision tree in the tax rebate example. In other cases, the preferable machine-learning targeting rule is like a black box, as with the above-mentioned random forest for the public guarantee scheme. The lack of interpretability of black-box rules can be seen as a threat to transparency, because excluded agents may not easily understand on which grounds they have not been targeted (Athey 2017). The trade-off between precision and transparency depends on the specific application.

However, there is a dimension of transparency, which may be referred to as substantive transparency, that concerns the accountability of the policymaker to accomplish her mission, i.e. using public money in an effective way. In this respect, machine-learning targeting clearly trumps coarse assignment rules.

Another concern is that machine learning-based targeting rules are built by looking at a specific payoff (e.g. improve firm access to credit). To reach this goal, the targeting rule selects specific groups (the targets) and not others. This selection may hurt other policy goals (‘omitted payoffs’; see Kleinberg et al. 2018). For instance, elaborating on the Guarantee Fund example, the public guarantee was also strongly advocated as a counter-measure for the recession. One may wonder whether our machine-learning targeting rule ends up excluding firms located in southern regions, which were more strongly hit by the crisis. This is not the case: the machine learning-based rule prioritises the South, while the Guarantee Fund’s actual rule favours the North.

The last concern is that, when a targeting rule is known, agents may manipulate their observable characteristics to benefit from the policy. This is, however, true for any kind of targeting. Comparing, for instance, the decision tree with the actual rule for the tax rebate, one could see that, in order to get the bonus, the potential recipient should manipulate multiple variables (say, both financial assets and income in the simplest case), while the actual measure is only based on income.

Authors’ note: The views expressed here are those of the authors and do not necessarily reflect those of the Bank of Italy.

References

Angrist, JD, and JS Pischke (2010), “The credibility revolution in empirical economics: How better research design is taking the con out of econometrics”, Journal of Economic Perspectives 24(2): 3–30.

Andini, M, E Ciani, G de Blasio, A D’Ignazio and V Salvestrini (2018a), “Targeting with machine learning: An application to a tax rebate program in Italy”, Journal of Economic Behavior and Organization, forthcoming.

Andini, M, M Boldrini, E Ciani, G de Blasio, A D’Ignazio and A Paladini (2018b), “Machine learning in the service of policy targeting: The case of public credit guarantees”, Bank of Italy Working Paper, forthcoming.

Athey, S (2017), “Beyond prediction: Using big data for policy problems”, Science 355(6324): 483–485.

Athey, S, and G Imbens (2016), “Recursive partitioning for heterogeneous causal effects”, PNAS 113(27): 7353–7360.

Chalfin, A, O Danieli, A Hillis, Z Jelveh, M Luca, J Ludwig and S Mullainathan (2016), “Productivity and selection of human capital with machine learning”, American Economic Review 106(5): 124–127.

de Blasio, G, S De Mitri, A D’Ignazio, P Finaldi Russo and L Stoppani (2018), “Public guarantees to SME borrowing. A RDD evaluation”, Journal of Banking and Finance 96: 73–86.

Hastie, T, R Tibshirani and J Friedman (2009), The elements of statistical learning: Data mining, inference, and prediction, New York: Springer.

Jiménez, G, S Ongena, JL Peydró and J Saurina (2012), “Credit supply and monetary policy: Identifying the bank balance-sheet channel with loan applications”, American Economic Review 102(5): 2301–2326.

Kang, JS, P Kuznetsova, M Luca and Y Choi (2013), “Where not to eat? Improving public policy by predicting hygiene inspections using online reviews”, Proceedings of the 2013 Conference on Empirical Methods in Natural Language Processing, 1443–1448.

Kleinberg, J, J Ludwig, S Mullainathan and Z Obermeyer (2015), “Prediction policy problems”, American Economic Review 105(5): 491–495.

Kleinberg, J, H Lakkaraju, J Leskovec and S Mullainathan (2018), “Human decisions and machine predictions”, The Quarterly Journal of Economics 133(1): 237–293.

McBride, L, and A Nichols (2015), “Improved poverty targeting through machine learning: An application to the USAID poverty assessment tools”, working paper.

Mullainathan, S, and J Spiess (2017), “Machine learning: An applied econometric approach”, Journal of Economic Perspectives 31(2): 87–106.

Rockoff, JE, BA Jacob, TJ Kane and DO Staiger (2011), “Can you recognize an effective teacher when you recruit one?”, Education Finance and Policy 6(1): 43–74.

Wager, S, and S Athey (2018), “Estimation and inference of heterogeneous treatment effects using random forests”, Journal of the American Statistical Association 113(523): 1228–1242.

World Bank (2015), “Principles for public credit guarantee schemes for SMEs”.

[1] We refer here to supervised learning methods. Other examples about the use of machine learning to assist public decision making can be found in Rockoff et al. (2011), Kang et al. (2013), McBride and Nichols (2015), Chalfin et al. (2016), and Kleinberg et al. (2018).

[2] In our applications, we exploit the fact that we know which group we would like to target and, at the same time, we have data where we observe this condition, so that we can use machine learning to estimate a prediction model. This might not always be the case. Furthermore, a policymaker might want to base the targeting directly on ex post evidence about causal effects, without any a priori assumption about which group to target. Athey and Imbens (2016) as well as Wager and Athey (2018) have developed machine-learning methods to identify the population groups where the causal effects are stronger.

[3] Credit rationing is assessed by looking at the credit dynamics for firms that have applied for bank loans (similarly to Jiménez et al. 2012).