Car reviewers tend to be flown to far-off destinations where they “get into a new model, eat three stars, sleep five stars”, all paid for by the manufacturer (Van Putten 2020; Tesla is reportedly an exception, see Hogan 2020). Movie critics do their work while enjoying free meals and hotel accommodation in garden spots like Hawaii (Ravid et al. 2006).

In short, whenever expert reviewers conduct their work non-blind, they may be exposed to the tactics producers use to get a favourable review. Producers have a strong incentive to capture expert reviewers because their reviews are hugely influential (e.g. Ashenfelter and Jones 2013, Reinstein and Snyder 2005), alongside other signals of product quality such as (online) word-of-mouth (Johnston et al. 2019). Falling for such tactics benefits the reviewer, is unlikely to be detected, and may be regarded as a harmless perk that comes with the job.

We know next to nothing about the prevalence and consequences of cosy relationships between reviewers and producers. The only exception is empirical work on readily observable, more ‘formal’ potential sources of bias, such as advertising by producers in the outlet that publishes the review (Reuter and Zitzewitz 2004) and patterns of ownership between outlets and producers (DellaVigna and Hermle 2017). Given a lack of awareness of less easily observable, informal relationships, many expert product reviews may be more biased than consumers realise.

A non-blind test

In a new paper, we present a textbook case of bias in a major expert review in the food-service industry (Vollaard and Van Ours 2021). Products and services in this industry tend to have experience attributes, making an expert review particularly important for consumers. This also holds for the review under study.

Every year for 36 years, reviewers assessed the quality of herring served at a great number of fish shops and fish stands in the Netherlands. According to local tradition, herring is lightly salted and frozen for preservation but otherwise raw when served. The fishmonger’s handling of the herring is of the essence as the product goes bad very quickly, which can lead to serious food poisoning.

The test received major press coverage and had reputational consequences for the businesses involved. The test panel visited a varying sample of about 150 fishmongers every year. It took the panel about two weeks each year to complete their tour. The panel rated the fish quality on a scale of 0 to 10, which allowed for easy comparisons across businesses.

We happened to know of a conflict of interest of one of the reviewers: the reviewer was affiliated with a specific supplier of herring, which we refer to as supplier A. We can detect the presence of a bias towards businesses stocked by supplier A because we have data on all the items that matter for the reviewers’ ratings.

Our data relate to the final two years the test was conducted – 2016 and 2017. For each of the 292 reviewed businesses, an exhaustive list was published of the product attributes that went into the assessment by the reviewers. We scraped the data from the publishing outlet’s web pages. We also tracked down which businesses were stocked by supplier A at the time of the test. These data were double-checked with wholesalers as well as fishmongers.

If the overall rating is higher for businesses stocked by supplier A than can be explained by these product attributes, then we interpret this as evidence of bias. Our identifying assumption is that the reviewers’ assessment of quality is fully reflected in the ratings and verbal judgements of individual attributes.
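One way to write this test down is as a regression of the overall rating on the published attributes plus an indicator for supplier A. The notation here is ours, a stylised sketch rather than the exact specification in the paper: $R_i$ is business $i$'s overall rating, $X_i$ the vector of coded product attributes, and $A_i = 1$ if business $i$ was stocked by supplier A (0 otherwise).

$$
R_i = \alpha + X_i'\beta + \delta A_i + \varepsilon_i
$$

Under the identifying assumption, the attributes in $X_i$ capture everything the reviewers judged, so without bias $\delta = 0$. An estimate of $\delta$ that is significantly above zero is the kind of evidence of bias described above.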

Biased ratings

Once it was clear which businesses were stocked by supplier A, a striking pattern emerged. On average, fishmongers stocked by supplier A received a final rating of 8.2; the other businesses, a meagre 5.5. The average ranking for the two groups differed by almost 50 places. Not surprisingly, the top ten featured a disproportionate share of businesses stocked by supplier A. Ending up in the top ten made all the difference: these businesses received a lot of attention in the media, which had a large positive effect on sales.

The difference in ratings may also reflect differences in the quality of the herring served. To detect a bias, we need to fully adjust for any differences in quality. To that end, we create a homogeneous sample of businesses that scored identically on every attribute that goes into the provisional rating. This provisional rating was established at the point of sale and left some discretion to the panel (later adjustments to the rating, such as those based on microbiological condition, were mechanical).

Within this homogeneous sample, all herring was freshly prepared and excellently cleaned, had an average degree of ripening, and obtained the maximum score on the verbal judgement of taste, smell, visual appearance, and texture.
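The comparison within the homogeneous sample can be sketched in a few lines. This is a minimal illustration under our own assumptions: the `Review` record, its field names and the coded attribute values are hypothetical, and the ratings are made up rather than taken from the test. The idea is simply to keep the businesses whose attribute profile matches the top profile exactly and then compare mean ratings across the two supplier groups.

```python
from dataclasses import dataclass
from statistics import mean


@dataclass(frozen=True)
class Review:
    # Hypothetical record layout; field names are illustrative only.
    name: str
    supplier_a: bool   # stocked by supplier A at the time of the test
    attributes: tuple  # coded scores: freshness, cleaning, ripening, taste, smell, look, texture
    rating: float      # provisional rating on the 0-10 scale


def homogeneous_sample(reviews, profile):
    """Keep only businesses whose coded attributes equal the given profile."""
    return [r for r in reviews if r.attributes == profile]


def rating_gap(sample):
    """Mean rating of supplier-A businesses minus mean rating of the rest."""
    a = [r.rating for r in sample if r.supplier_a]
    other = [r.rating for r in sample if not r.supplier_a]
    if not a or not other:
        raise ValueError("both groups must be present in the sample")
    return mean(a) - mean(other)


# Made-up example: four businesses share the top profile, one does not.
TOP = ("fresh", "excellent", "average", "max", "max", "max", "max")
reviews = [
    Review("shop1", True, TOP, 9.0),
    Review("shop2", True, TOP, 8.5),
    Review("shop3", False, TOP, 8.25),
    Review("shop4", False, TOP, 8.25),
    Review("shop5", False, ("fresh", "poor", "average", "max", "max", "max", "max"), 6.0),
]
sample = homogeneous_sample(reviews, TOP)
print(len(sample), rating_gap(sample))  # 4 0.5
```

Because every business in `sample` has identical attributes, any systematic difference in mean rating between the groups cannot be explained by those attributes.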

In the absence of bias within this homogeneous sample of businesses, we should not see systematically higher ratings for fishmongers stocked by the herring supplier to which the reviewer was affiliated. That is not what we find.

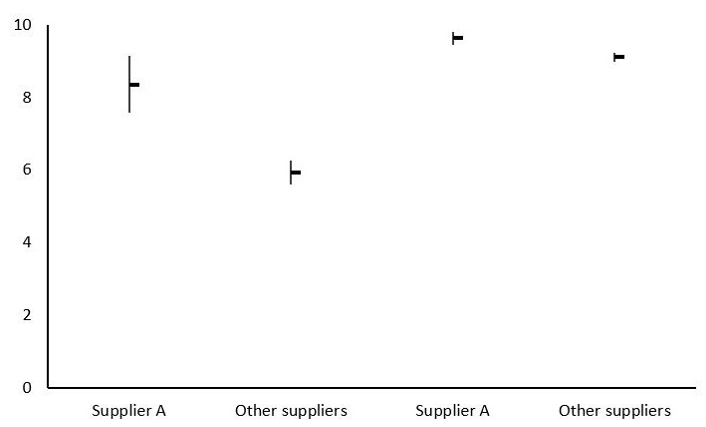

As illustrated in Figure 1, the ratings for the two groups diverge, and the difference is statistically significant. The gap in ratings amounts to about half a point, which translates into eight places in the ranking in the test. That may seem small, but given the fierce competition for a place in the top ten, it made a big difference.
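How many places a given rating gap is worth depends on how tightly the other ratings are bunched. The sketch below illustrates this with hypothetical ratings; it assumes tied businesses share the best position among them, which is our assumption, since the tie-breaking rule of the test is not described here.

```python
def competition_rank(rating, others):
    """Rank 1 = best; tied businesses share the best position among them."""
    return 1 + sum(1 for o in others if o > rating)


def places_gained(rating, others, gap):
    """How many ranking places a business climbs if its rating rises by `gap`."""
    return competition_rank(rating, others) - competition_rank(rating + gap, others)


# Hypothetical ratings of the other businesses (illustrative only).
others = [9.5, 9.0, 8.75, 8.5, 8.5, 8.25, 7.75]
print(places_gained(8.0, others, 0.5))  # climbs from 7th to 4th place: 3
```

With roughly 150 businesses per year packed into a narrow band near the top of the scale, the same half point can be worth many more places than in this toy list.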

Figure 1 Difference in provisional rating in full sample (left) and homogeneous sample (right)

Notes: Number of observations: 292 (full sample), 31 (homogeneous sample). The rating gap in the homogeneous sample does not reflect possible bias in assigning quality attributes such as quality of cleaning.

To investigate the sensitivity of our findings with respect to the coding of the verbal judgement of taste, smell, visual appearance, and texture of the herring, we asked four individuals with a taste for herring to rate the quality of the herring based on the verbal judgement.

Their coding turned out to be similar but differed in some individual cases. As shown in Table 1, the resulting homogeneous samples also show a substantial gap in ratings between businesses stocked and not stocked by supplier A. The difference in rating is 0.5 to 0.6; the difference in ranking, 9 to 12 places. Clearly, the gap we find is not an artefact of the way we coded the panel's natural-language comments.

Table 1 Provisional rating and ranking of homogeneous samples

Notes: The size N of the sample that is homogeneous on all factors going into the provisional rating differs between the authors and the four coders because they coded the verbal judgements differently. All gaps in ratings are statistically significant at the 1% level. The ratings and rankings do not reflect possible bias in assigning quality attributes such as quality of cleaning.

As a further test, we estimate a linear model in which the ratings of the complete sample of businesses are related to all the product features that went into the provisional rating. The model explains more than 90% of the variation in ratings. Moreover, the estimated coefficients are in line with what is known about the way the panel of experts went about their business.

Again, we find that the businesses stocked by supplier A received higher ratings than can be accounted for by the exhaustive list of product attributes. This finding of a gap in ratings is robust to a range of alternative model specifications, including a fully non-parametric specification.
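A minimal sketch of this kind of regression is shown below, using simulated data rather than the study's data. The number of attributes, their coding, the coefficients and the built-in bias of 0.5 points are all our assumptions. Supplier-A businesses are also simulated to score better on the attributes, so the raw difference in mean ratings overstates the bias, while the coefficient on the supplier-A dummy recovers it.

```python
import numpy as np


def estimate_supplier_gap(attributes, supplier_a, ratings):
    """OLS of ratings on an intercept, the attribute columns and a supplier-A dummy.

    Returns the estimated coefficient on the dummy (delta).
    """
    n = len(ratings)
    X = np.column_stack([np.ones(n), attributes, supplier_a.astype(float)])
    coef, *_ = np.linalg.lstsq(X, ratings, rcond=None)
    return coef[-1]


# Simulated data, NOT the study's data: 292 businesses, 5 coded attributes (0, 1 or 2).
rng = np.random.default_rng(0)
n, k = 292, 5
attributes = rng.integers(0, 3, size=(n, k)).astype(float)

# Businesses with better attributes are more likely to be stocked by supplier A,
# so a raw comparison of mean ratings mixes quality with bias.
quality = attributes.sum(axis=1)
supplier_a = rng.random(n) < 0.2 + 0.05 * quality

# Ratings depend on the attributes plus a built-in 0.5-point bonus for supplier A.
true_beta = np.array([0.6, 0.4, 0.3, 0.5, 0.2])
ratings = 3.0 + attributes @ true_beta + 0.5 * supplier_a + rng.normal(0, 0.2, n)

raw_gap = ratings[supplier_a].mean() - ratings[~supplier_a].mean()
delta_hat = estimate_supplier_gap(attributes, supplier_a, ratings)
print(f"raw gap {raw_gap:.2f}, adjusted gap {delta_hat:.2f}")
```

A linear specification like this is only one option; a fully non-parametric alternative would, for example, include a separate dummy for every distinct attribute profile, which generalises the homogeneous-sample comparison.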

It should be noted that our results may only reflect a part of the bias that resulted from the conflict of interest. We took the assessments of individual product attributes that factor into the overall rating as a given. Whether the reviewers misrepresented how well businesses did on an individual attribute such as quality of cleaning, or whether they tipped off fishmongers stocked by supplier A about their upcoming visit, is something that we could not establish ex post. We also left aside the issue that the reviewers may have had personal preferences about the product that were not shared by all consumers (Li 2017).

Conclusions

Based on unique data in combination with knowledge of an expert reviewer's conflict of interest, we were able to show that businesses were not judged by the same rules. A reviewer's conflict of interest that may be regarded as ‘coming with the job’ turned out to be anything but harmless. Our findings confirm earlier doubts about this particular test, voiced by one of the authors and by disgruntled fishmongers, that led to the demise of the test after 36 years.

The setting we studied may be unique in that it provided an opportunity to conduct such an empirical analysis – including complete data for an exhaustive list of product attributes and a decent sample size – but we have no reason to believe that our setting is unique in the sense of how cosy relationships may corrupt non-blind expert product reviews.

Biases in expert opinions easily arise, whether conscious or unconscious (Goldin and Rouse 2000). If such reviews are not bound by very strict rules such as those set by Consumer Reports (2020), consumers would be well advised not to put too much weight on experts' advice about which products they should and should not buy.

References

Ashenfelter, O, and G V Jones (2013), “The demand for expert opinion: Bordeaux wine”, Journal of Wine Economics 8(3): 285–93.

Consumer Reports (2020), “Food testing”, web page.

DellaVigna, S, and J Hermle (2017), “Does conflict of interest lead to biased coverage? Evidence from movie reviews”, The Review of Economic Studies 84(4): 1510–50.

Goldin, C, and C Rouse (2000), “Orchestrating impartiality: The impact of blind auditions on female musicians”, American Economic Review 90: 715–41.

Hogan, M (2020), “Here’s why you probably won’t see any more Tesla reviews in car magazines”, Road & Track, 6 October.

Johnston, D, T Kuchler, J Stroebel and A Wong (2019), “Peer effects in product adoption”, VoxEU.org, 18 September.

Li, D (2017), “Expertise versus bias in evaluation: Evidence from the NIH”, American Economic Journal: Applied Economics 9(2): 60–92.

Reinstein, D A, and C M Snyder (2005), “The influence of expert reviews on consumer demand for experience goods: A case study of movie critics”, Journal of Industrial Economics 53(1): 27–51.

Reuter, J, and E Zitzewitz (2004), “Do ads influence editors? Advertising and bias in the financial media”, Quarterly Journal of Economics 121: 197–227.

Van Putten, B (2020), “Deze auto moet Elon Musk bashen, dat lukt aardig”, NRC Handelsblad (Dutch newspaper), 2 September.

Vollaard, B, and J C van Ours (2021), “Bias in expert product reviews”, CEPR Discussion Paper DP16147.