There is growing concern that funding agencies that support scientific research are increasingly risk-averse and that their competitive selection procedures encourage relatively safe and exploitative projects at the expense of novel projects exploring untested approaches (Kolata 2009, Alberts 2010, Walsh 2013). At the same time, funding agencies increasingly rely on bibliometric indicators to aid in decision making and performance evaluation (Hicks et al. 2015).

Research underpinning scientific breakthroughs is often driven by taking a novel approach, which has a higher potential for major impact but also a higher risk of failure. It may also take longer for novel research to have a major impact, because of resistance from incumbent scientific paradigms or because of the longer time-frame required to incorporate the findings of novel research into follow-on research. The ‘high risk/high gain’ nature of novel research makes it particularly appropriate for public support. If novel research, however, has an impact profile distinct from non-novel research, the use of bibliometric indicators may bias funding decisions against novelty.

In a new paper, we examine the complex relationship between novelty and impact, using the lifetime citation trajectories of 1,056,936 research articles published in 2001 across all disciplines in the Web of Science (WoS) (Wang et al. 2016).

Measuring the novelty of scientific publications

To assess the novelty of a research article, we examine the extent to which it makes novel combinations of prior knowledge components. This combinatorial view of novelty has been embraced by scholars to measure the novelty of research proposals, publications, and patents (Fleming 2001, Uzzi et al. 2013, Boudreau et al. forthcoming). Following this perspective, we examine whether an article makes new combinations of referenced journals that have never appeared in prior literature, taking into account the knowledge distance between the newly combined journals based on their co-citation profiles (i.e. ‘common friends’). Technically, we measure the novelty of a paper as the number of new referenced journal pairs, each weighted by the cosine distance (one minus the cosine similarity) between the newly paired journals, so that combinations of more distant journals count for more.
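The indicator can be sketched in a few lines of Python. This is a minimal illustration under simplifying assumptions, not the code used in the paper: journal co-citation profiles are given as sparse count dictionaries, the set of journal pairs already seen in earlier literature (`prior_pairs`) is supplied as input, and all function names are hypothetical.

```python
import math
from itertools import combinations


def cosine(u: dict[str, float], v: dict[str, float]) -> float:
    """Cosine similarity between two sparse co-citation profiles."""
    dot = sum(w * v.get(k, 0.0) for k, w in u.items())
    norm_u = math.sqrt(sum(w * w for w in u.values()))
    norm_v = math.sqrt(sum(w * w for w in v.values()))
    return dot / (norm_u * norm_v) if norm_u and norm_v else 0.0


def novelty_score(ref_journals, prior_pairs, profiles):
    """Return (number of new journal pairs, distance-weighted novelty score).

    ref_journals: journals cited in the paper's reference list.
    prior_pairs:  hypothetical set of alphabetically sorted (journal_a, journal_b)
                  tuples already co-cited in earlier papers.
    profiles:     {journal: {co-cited journal: count}} co-citation profiles.
    """
    n_new, score = 0, 0.0
    # Sorting makes every pair satisfy a < b, matching the prior_pairs format.
    for a, b in combinations(sorted(set(ref_journals)), 2):
        if (a, b) in prior_pairs:
            continue  # combination already seen: not novel
        n_new += 1
        # Distant pairs (low cosine similarity) contribute close to 1.
        score += 1.0 - cosine(profiles.get(a, {}), profiles.get(b, {}))
    return n_new, score


profiles = {"J1": {"X": 1, "Y": 1}, "J2": {"X": 1, "Y": 1}, "J3": {"Z": 1}}
n, s = novelty_score(["J1", "J2", "J3"], {("J1", "J3")}, profiles)
# n == 2; s is about 1.0 (the similar pair J1-J2 adds ~0, the distant pair J2-J3 adds 1)
```

Weighting by distance rather than similarity means a new pair of journals with near-identical citation neighbourhoods barely registers, while a bridge between unrelated literatures counts fully.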

Applying this newly minted indicator of novelty, we find that novel research is relatively rare: only 11% of all papers make new combinations of referenced journals. Furthermore, the degree of novelty is highly skewed: more than half (55%) of novel papers have only one new journal pair, while only a few papers make many, and more distant, new combinations. To deal with this skewness, we classify papers into three categories: (i) non-novel, if a paper has no new journal combinations; (ii) moderately novel, if a paper makes new combinations but its novelty score falls below the top 1% of its field; and (iii) highly novel, if its novelty score is among the top 1% of its field.
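A minimal sketch of the three-way classification, assuming each paper arrives as a hypothetical `(paper_id, field, n_new_pairs, novelty_score)` tuple and that the top-1% cutoff is taken over all papers in the field, with ties at the cutoff counted as highly novel:

```python
from collections import defaultdict


def classify_novelty(papers):
    """papers: iterable of (paper_id, field, n_new_pairs, novelty_score) tuples.
    Returns {paper_id: "non-novel" | "moderately novel" | "highly novel"}."""
    papers = list(papers)
    by_field = defaultdict(list)
    for _, field, _, score in papers:
        by_field[field].append(score)
    cutoffs = {}
    for field, scores in by_field.items():
        ranked = sorted(scores, reverse=True)
        k = (len(ranked) + 99) // 100  # ceil(1% of the field size)
        cutoffs[field] = ranked[k - 1]  # lowest score inside the top 1%
    labels = {}
    for pid, field, n_new, score in papers:
        if n_new == 0:
            labels[pid] = "non-novel"  # no new journal combinations at all
        elif score >= cutoffs[field]:
            labels[pid] = "highly novel"
        else:
            labels[pid] = "moderately novel"
    return labels


# 200 papers in one field: 180 without new pairs, 20 with scores 1..20.
papers = [(f"z{i}", "bio", 0, 0.0) for i in range(180)]
papers += [(f"n{i}", "bio", 1, float(i)) for i in range(1, 21)]
labels = classify_novelty(papers)
# n19 and n20 are highly novel, n1..n18 moderately novel, the rest non-novel
```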

‘High risk/high gain’ nature of novel research

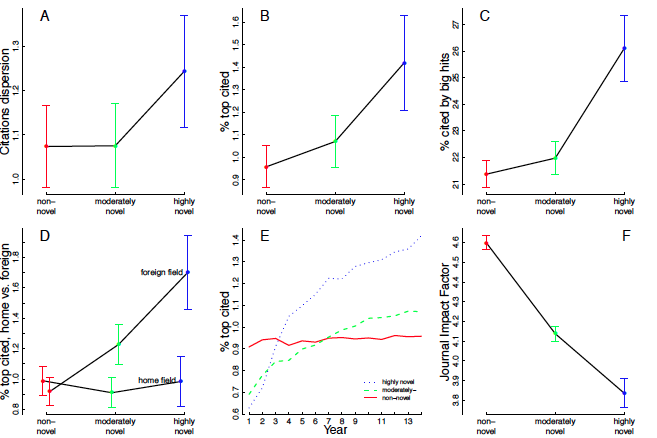

In view of the risky nature of novel research, we expect novel papers to have a higher variance in their citation performance. Following Fleming (2001), we use a generalized negative binomial model to estimate the effects of novelty on both the mean and the dispersion of received citations. We count citations over a 14-year window for our set of WoS articles published in 2001 and control for potential confounding factors. We find that the dispersion of the citation distribution is 15% higher for highly novel papers than for non-novel papers (Figure 1A).
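To make the model concrete, the sketch below writes out the log-likelihood such a model maximizes, assuming the common NB2 parameterization (variance = μ + αμ²) with a log link for both the mean μ and the dispersion α. The function names and covariate layout are illustrative; the paper's exact specification may differ.

```python
import math


def nb2_logpmf(y: int, mu: float, alpha: float) -> float:
    """Log-probability of a count y under a negative binomial with mean mu
    and dispersion alpha (variance = mu + alpha * mu**2)."""
    r = 1.0 / alpha
    return (math.lgamma(y + r) - math.lgamma(r) - math.lgamma(y + 1)
            + r * math.log(r / (r + mu)) + y * math.log(mu / (r + mu)))


def gnb_loglik(beta, gamma, X, Z, y):
    """Generalized negative binomial log-likelihood: both the mean and the
    dispersion are log-linear in covariates.

    X, Z: per-paper covariate rows for the mean and dispersion equations
          (e.g. an intercept plus novelty-class dummies in Z).
    y:    observed citation counts.
    """
    total = 0.0
    for xi, zi, yi in zip(X, Z, y):
        mu = math.exp(sum(b * v for b, v in zip(beta, xi)))
        alpha = math.exp(sum(g * v for g, v in zip(gamma, zi)))
        total += nb2_logpmf(yi, mu, alpha)
    return total


# Sanity check: the probabilities for mu = 5, alpha = 0.5 sum to about 1.
total_prob = sum(math.exp(nb2_logpmf(k, 5.0, 0.5)) for k in range(2000))
```

In practice one would maximize `gnb_loglik` over `beta` and `gamma` with a numerical optimizer (for example by minimizing its negative with `scipy.optimize.minimize`). If `Z` contains a highly-novel dummy, the exponential of its coefficient is the ratio of dispersions, so a value of about 1.15 would correspond to 15% higher dispersion.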

We also expect high-risk novel research to be more likely to become ‘big hits’, i.e. to receive an exceptionally large number of citations, defined here as being among the top 1% most cited articles in the same field and publication year. We find that the odds of a big hit are 40% higher for highly novel papers than for comparable non-novel papers (Figure 1B).
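The big-hit definition and the odds comparison can be sketched as follows, assuming hypothetical `(paper_id, field, pub_year, citations)` records. Note that the 40% figure in the text comes from a regression with controls, whereas `odds_ratio` here is only the raw ratio of odds between two groups.

```python
from collections import defaultdict


def big_hits(papers):
    """papers: iterable of (paper_id, field, pub_year, citations).
    Returns the ids in the top 1% by citations within each field and year
    (ties at the cutoff are all included; uncited papers never qualify)."""
    groups = defaultdict(list)
    for pid, field, year, cites in papers:
        groups[(field, year)].append((cites, pid))
    hits = set()
    for members in groups.values():
        ranked = sorted((c for c, _ in members), reverse=True)
        k = (len(ranked) + 99) // 100  # ceil(1% of the group size)
        cutoff = ranked[k - 1]
        hits.update(pid for c, pid in members if c >= cutoff and c > 0)
    return hits


def odds_ratio(p_treated: float, p_control: float) -> float:
    """Ratio of the odds p / (1 - p) in two groups."""
    return (p_treated / (1 - p_treated)) / (p_control / (1 - p_control))


papers = [(f"a{i}", "bio", 2001, i) for i in range(100)]
papers += [(f"b{i}", "physics", 2001, i) for i in range(200)]
hits = big_hits(papers)  # {"a99", "b198", "b199"}
ratio = odds_ratio(0.5, 0.25)  # about 3.0
```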

Novel research is not only more likely to become a big hit itself, but also more likely to stimulate follow-on research that generates major impact. We find that papers citing novel papers tend to receive more citations themselves than papers citing non-novel papers. Likewise, the odds of being cited by big hits are 26% higher for highly novel papers than for comparable non-novel papers receiving the same number of citations (Figure 1C).
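Both follow-on measures can be computed from a citation edge list. A minimal sketch, assuming hypothetical inputs: `(citing_id, cited_id)` pairs, a dictionary of each paper's own citation count, and a precomputed set of big hits. Papers that are never cited do not appear in the output.

```python
from collections import defaultdict
from statistics import mean


def follow_on_impact(citations, cite_counts, big_hits):
    """For each cited paper: mean citations received by its citing papers,
    and whether at least one citing paper is a big hit.

    citations:   iterable of (citing_id, cited_id) pairs
    cite_counts: {paper_id: citations received by that paper}
    big_hits:    set of big-hit paper ids
    """
    citers = defaultdict(list)
    for citing, cited in citations:
        citers[cited].append(citing)
    return {
        paper: {
            "mean_citer_citations": mean(cite_counts[c] for c in cs),
            "cited_by_big_hit": any(c in big_hits for c in cs),
        }
        for paper, cs in citers.items()
    }


edges = [("A", "P"), ("B", "P"), ("C", "Q")]
impact = follow_on_impact(edges, {"A": 10, "B": 2, "C": 1}, {"A"})
# P: citing papers average 6 citations and include a big hit; Q: average 1, no big hit
```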

Figure 1. Impact profile of novel research

Notes: (A) Estimated dispersion of citations (14-year window). (B) Estimated probability of being among the top 1% cited articles in the same WoS subject category and publication year, based on 14-year citations. (C) Estimated probability of being cited by big hits. (D) Estimated probability of being among the top 1% cited articles in the same WoS subject category and publication year, based separately on 14-year home-field and foreign-field citations. (E) Estimated probability of big hits, using 14 consecutive time windows to dynamically identify big hits. For example, big hits in year 3 are identified as the top 1% most cited papers based on their cumulative citations in a 3-year time window, i.e., from 2001 to 2003. (F) Estimated Journal Impact Factor. All regression models control for field (i.e., WoS subject category) fixed effects, the number of references and authors, and whether the paper is internationally coauthored; the model in (C) additionally controls for the number of citations. All estimated values are for an average paper (i.e., in the biggest WoS subject category, not internationally coauthored, and with all other covariates at their means) in each novelty class. The vertical bars represent 95% confidence intervals. Data consist of 1,056,936 WoS articles published in 2001 and are sourced from the Thomson Reuters Web of Science Core Collection.

Cross-disciplinary impact of novel research

Examining the disciplinary profile of impact reveals that, compared with non-novel papers receiving the same number of citations, highly novel papers are cited by more fields and receive a larger share of their citations from fields other than their own, suggesting a greater cross-disciplinary impact of novel research.
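A minimal sketch of these disciplinary measures, assuming for simplicity that every paper has exactly one field (in WoS a journal can belong to several subject categories, which this sketch ignores) and that citations are given as hypothetical `(citing_id, cited_id)` pairs:

```python
def citation_field_profile(paper_id, citations, field_of):
    """Disciplinary profile of the citations a paper receives.

    citations: iterable of (citing_id, cited_id) pairs.
    field_of:  {paper_id: field}, one field per paper (a simplification).
    """
    home = field_of[paper_id]
    citing_fields = [field_of[citing] for citing, cited in citations
                     if cited == paper_id]
    n_home = sum(1 for f in citing_fields if f == home)
    n_foreign = len(citing_fields) - n_home
    return {
        "n_citing_fields": len(set(citing_fields)),
        "home_citations": n_home,
        "foreign_citations": n_foreign,
        "foreign_share": n_foreign / len(citing_fields) if citing_fields else 0.0,
    }


field_of = {"P": "bio", "A": "bio", "B": "physics", "C": "chemistry", "D": "bio"}
edges = [("A", "P"), ("B", "P"), ("C", "P"), ("D", "P")]
profile = citation_field_profile("P", edges, field_of)
# 3 citing fields, 2 home and 2 foreign citations, foreign share 0.5
```

Splitting `home_citations` from `foreign_citations` is also what allows big hits to be identified separately on home-field and foreign-field counts, as in Figure 1D.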

This raises the question of the extent to which the big-gain profile of novel papers is driven by impact outside their own field. We find that novel papers are more likely to be highly cited only in foreign fields, not in their home field (Figure 1D), calling to mind the passage from Luke 4:24: “Verily I say unto you, No prophet is accepted in his own country.”

Delayed recognition for novel research

We find that highly novel papers are less likely to be top cited when using citation time windows shorter than three years (Figure 1E). But from the fourth year after publication, highly novel papers are significantly more likely to be big hits, and their advantage over non-novel papers further increases over time. Moderately novel papers suffer even more from delayed recognition. They are less likely to be top cited when using citation windows shorter than five years and only have a significantly higher chance of being a big hit with windows of at least nine years.
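The moving-window analysis behind Figure 1E can be sketched as follows, assuming hypothetical per-paper lists of yearly citation counts starting in the publication year (so a window of `t` years for a 2001 article covers 2001 to 2000 + t). As with the static definition, big hits are the top 1% within each field, recomputed for every window; uncited papers never qualify.

```python
from collections import defaultdict


def dynamic_big_hits(yearly, field_of, max_window=14):
    """Top-1% papers within each field for citation windows of 1..max_window years.

    yearly:   {paper_id: [citations in publication year, next year, ...]}
    field_of: {paper_id: field}
    Returns {window_length: set of big-hit paper ids}.
    """
    result = {}
    for t in range(1, max_window + 1):
        # Cumulative citations within the first t years after publication.
        cum = {p: sum(c[:t]) for p, c in yearly.items()}
        by_field = defaultdict(list)
        for p, total in cum.items():
            by_field[field_of[p]].append(total)
        cutoffs = {}
        for field, totals in by_field.items():
            ranked = sorted(totals, reverse=True)
            k = (len(ranked) + 99) // 100  # ceil(1% of the field size)
            cutoffs[field] = ranked[k - 1]
        result[t] = {p for p, total in cum.items()
                     if total > 0 and total >= cutoffs[field_of[p]]}
    return result


# 100 papers in one field: an early riser, a late bloomer, and 98 with 1 citation.
yearly = {"fast": [5] + [0] * 13, "slow": [0, 0, 0, 10] + [0] * 10}
yearly.update({f"p{i}": [1] + [0] * 13 for i in range(98)})
field_of = {p: "bio" for p in yearly}
hits = dynamic_big_hits(yearly, field_of)
# hits[3] == {"fast"}, while from window 4 onward hits[t] == {"slow"}
```

The toy late bloomer illustrates the pattern described above: under a three-year window it is invisible, yet it ends up as the field's top paper.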

Bibliometric bias against novelty

The finding of delayed recognition for novel research suggests that standard bibliometric indicators that use short citation time windows (typically two or three years) are biased against novelty, since novel papers need a sufficiently long citation window before reaching big-hit status.

Citation counts are one way to assess the bibliometric footprint of a paper. Another is to look at the Impact Factor of the journal in which the paper is published, where a journal's Impact Factor for a given year is the number of citations received in that year by items it published in the two preceding years, divided by the number of citable items it published in those two years. By way of example, the journal Science has an Impact Factor of 33.611; Nature has an Impact Factor of 41.456. Comparing the Impact Factors of the journals in which papers are published, we find that they are 18% lower for highly novel papers than for comparable non-novel papers (Figure 1F), suggesting that novel papers encounter obstacles in being accepted by journals holding central positions in science. The increased pressure on journals to boost their Impact Factor (Martin 2016), together with the fact that the Impact Factor is based on citations in the first two years after publication, suggests that journals may strategically choose not to publish novel papers, which are less likely to be highly cited in the short run.
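The two-year Impact Factor arithmetic is simple enough to sketch directly. The input layout below is a hypothetical simplification of what citation databases actually track:

```python
def two_year_impact_factor(year, cites, items):
    """Two-year Impact Factor of a journal for `year`.

    cites: {(pub_year, citing_year): citations to the journal's items}
    items: {pub_year: number of citable items the journal published}
    """
    num = cites.get((year - 1, year), 0) + cites.get((year - 2, year), 0)
    den = items.get(year - 1, 0) + items.get(year - 2, 0)
    return num / den


cites = {(2015, 2016): 300, (2014, 2016): 500, (2013, 2016): 900}
items = {2015: 100, 2014: 100}
jif = two_year_impact_factor(2016, cites, items)  # (300 + 500) / 200 = 4.0
```

The 900 citations to 2013 items are ignored: only citations arriving within the first two years count, so a paper whose citations take off in its fourth year adds nothing to its journal's Impact Factor.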

Policy implications

Taken together, our results suggest that standard bibliometric measures are biased against novel research and thus may fail to identify papers and individuals doing novel research. This bias against novelty imperils scientific progress, because novel research, as we have shown, is much more likely to become a big hit in the long run, as well as to stimulate follow-up big hits.

The bias against novelty is of particular concern given the increased reliance funding agencies place on classic bibliometric indicators in making funding and evaluation decisions. In addition, the finding that novel papers are highly cited in foreign fields highlights the importance of avoiding a monodisciplinary approach in peer review. Peer review is widely implemented in science decision-making, and peer review that is bounded by disciplinary borders may fail to recognise the full value of novel research, which typically lies outside the field in which that research is published.

If we want to encourage risk-taking, we need to be aware of the inherent bias in bibliometric measures against novel research. More importantly, when bibliometric indicators are used as part of the evaluation process, we advocate using a wider portfolio of indicators and time windows that extend beyond the standard two or three years.

References

Alberts B (2010) "Overbuilding Research Capacity". Science 329(5997): 1257.

Boudreau KJ, Guinan EC, Lakhani KR, Riedl C (forthcoming) "Looking Across and Looking Beyond the Knowledge Frontier: Intellectual Distance, Novelty, and Resource Allocation in Science". Management Science.

Fleming L (2001) "Recombinant uncertainty in technological search". Management Science 47(1): 117-132.

Hicks D, Wouters P, Waltman L, de Rijcke S, Rafols I (2015) "The Leiden Manifesto for research metrics". Nature 520: 429-431.

Kolata G (2009) "Grant System Leads Cancer Researchers to Play It Safe". New York Times, 27 June 2009.

Martin BR (2016) "Editors’ JIF-boosting stratagems – Which are appropriate and which not?" Research Policy 45(1): 1-7.

Uzzi B, Mukherjee S, Stringer M, Jones B (2013) "Atypical combinations and scientific impact". Science 342(6157): 468-472.

Walsh D (2013) "Not Safe for Funding: The N.S.F. and the Economics of Science". The New Yorker.

Wang J, Veugelers R, Stephan P (2016) "Bias against novelty in science: A cautionary tale for users of bibliometric indicators". CEPR Discussion Paper No. DP11228.