Financial risk models are critical for the operation of financial institutions and financial regulations. Surprisingly, however, little is known about their accuracy.

Generative artificial intelligence (AI) models, such as ChatGPT, are designed to always provide answers, even if they know little or nothing about what is being asked, a phenomenon known as AI hallucination (Taliaferro 2023). For AI hallucinations to matter, they need to sound credible to the human user.

What about more traditional risk models? Do they also hallucinate, and if so, are the risk forecasts seen as credible?

How risk models are created

When we see a number expressing financial risk, such as volatility, tail risk, credit risk, or systemic risk, it is not the result of a measurement, such as temperature or price, but rather the output of a model. This is what I called a Riskometer in a column from 2009 (Danielsson 2009). The reason is that risk is a latent variable that can only be inferred from historical price swings instead of being measured like temperature or prices. Even forward-looking risk metrics conveying market sentiment, such as VIX and credit default swap (CDS) spreads, are not much different because similar models inform them.

Consequently, we cannot get an unambiguous measurement of risk. Instead, there are infinitely many alternative risk numbers to choose from for the same outcome, because there is an infinite number of models one could create to estimate risk. We cope by selecting risk techniques via consensus mechanisms that prefer simple models informed by readily available data.

Ultimately, the question of model choice boils down to the words of George Box: "All models are wrong; some are useful," suggesting we should undertake some analysis of risk model accuracy. Every person who learns statistics is taught from the very beginning about the importance of confidence bounds. Why not include confidence intervals for risk forecasts? It isn't that simple. We can use standard techniques, but only if we assume the model is true, which, of course, is never the case. As Danielsson and Zhou (2015) argue in a VoxEU column titled "Why risk is hard to measure," it is not easy to evaluate the reliability of risk forecasts.
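To make the point about confidence intervals concrete, here is a minimal sketch of a 'standard technique': a parametric bootstrap around a 99% Value-at-Risk forecast. The choice of Value-at-Risk, the normal model, the simulated data, and all names and parameter values are illustrative assumptions, not taken from the column. The interval it produces is only meaningful if the fitted model is the true data-generating process, which is exactly the assumption that never holds.

```python
import random
import statistics

rng = random.Random(1)
N = 1000  # assumed sample size: about four years of daily returns

# Illustrative data: normally distributed daily returns with 1% volatility.
returns = [rng.gauss(0.0, 0.01) for _ in range(N)]

def var_99(sample):
    """99% Value-at-Risk from a normal model fitted to the sample (positive = loss)."""
    fitted_model = statistics.NormalDist(statistics.fmean(sample), statistics.stdev(sample))
    return -fitted_model.inv_cdf(0.01)

point = var_99(returns)

# Parametric bootstrap: redraw samples from the fitted model and re-estimate.
# This treats the fitted model as the truth, which is the weak link.
fitted = statistics.NormalDist(statistics.fmean(returns), statistics.stdev(returns))
draws = sorted(var_99(fitted.samples(N, seed=s)) for s in range(500))
lower, upper = draws[12], draws[487]  # approximate 2.5% and 97.5% percentiles

print(f"99% VaR forecast: {point:.4f}, 95% interval: [{lower:.4f}, {upper:.4f}]")
```

The interval captures estimation noise only. It says nothing about the error from having picked the wrong model in the first place.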

What risk models actually measure

As it turns out, the risk forecasts aren't all that accurate.

While the financial markets generate an enormous amount of data, petabytes daily, most of that is simply the high-frequency evolution of asset prices. A common method for obtaining risk forecasts is to feed relatively high-frequency data, such as daily prices, into a statistical model designed to represent the stochastic process of prices.

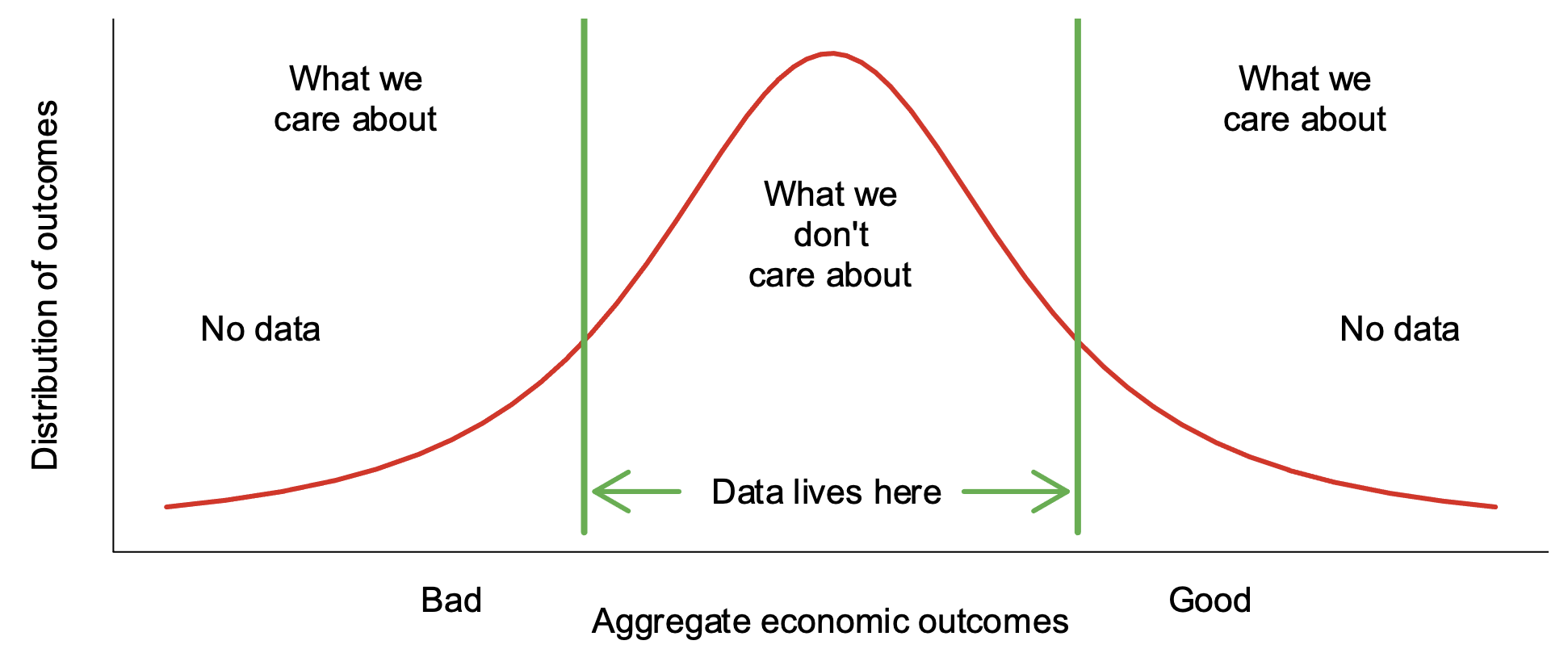

Figure 1 shows what actually happens.

Figure 1 Data generation and distribution of outcomes

The data used to estimate the model are the day-to-day fluctuations in the middle of the distribution, but those day-to-day events are usually not that important. What matters is the tails, the left for large losses and crises and the right for profit and economic growth. But that is not where data lives.

When the models hallucinate

Suppose we ask the risk models to provide an analysis of what happens less frequently. Perhaps a once-in-a-decade stock market crash, a financial catastrophe, a bank failure, or the possibility our pension will not be as good as advertised.

That analysis comes from the left tail of the distribution shown in Figure 1.

However, there is little to no information about such tail events. To accurately assess the risk of a once-in-ten-year stock market meltdown, we need observations of ten-year stock returns. Because the minimum sample size is in the hundreds, we would require millennia of data. The reason is that calculating the risk of a tail event requires a sufficiently large sample of such events, much larger than the sample needed to analyse more common events.
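The arithmetic behind the 'millennia of data' claim is short enough to write down. The sketch below assumes non-overlapping ten-year observations, and the sample sizes are illustrative stand-ins for 'in the hundreds'; the function name is hypothetical.

```python
def years_of_data_needed(event_horizon_years: float, min_observations: int) -> float:
    """Years of history needed to collect `min_observations` non-overlapping
    periods, each as long as the event horizon."""
    return event_horizon_years * min_observations

# Illustrative sample sizes "in the hundreds" (assumed values).
for n in (100, 300, 500):
    years = years_of_data_needed(10, n)
    print(f"{n} observations of ten-year returns -> {years:,.0f} years of data")
```

Even the smallest of these assumed sample sizes calls for a thousand years of stock returns.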

That is not possible because we do not have the data, so in practice, we use high-frequency observations from the middle of the distribution to first estimate the stochastic process governing the data and then use that to calculate the risk of the extreme events we are concerned about.

That is very easy to do. We only need to input a probability into the model, and the corresponding event appears. We capture once-a-month events, as well as those that happen once a year, once a century, and even once per millennium.

Unfortunately, because the algorithm was not trained on data drawn from such dramatic events, it simply makes up the numbers. The model is hallucinating. Generative AI models do so because they are trained to answer, however badly supported that answer is. Statistical models are just the same.
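A minimal sketch shows how willingly a model answers. It fits a normal distribution to 1,000 simulated daily returns and then asks for losses at ever more remote probabilities. The normal model, the 1% daily volatility, the 252 trading days per year, and all names are illustrative assumptions, not a description of any particular risk model in use.

```python
import random
import statistics

# Simulate about four years of daily returns (1,000 days). The 1% daily
# volatility and the normal distribution are illustrative assumptions.
rng = random.Random(42)
returns = [rng.gauss(0.0, 0.01) for _ in range(1000)]

# "Train" the model: a normal distribution fitted to day-to-day data.
model = statistics.NormalDist(statistics.fmean(returns), statistics.stdev(returns))

TRADING_DAYS = 252  # assumed trading days per year
horizons_years = {"month": 1 / 12, "year": 1, "decade": 10,
                  "century": 100, "millennium": 1000}

results = {}
for label, years in horizons_years.items():
    p = 1 / (years * TRADING_DAYS)          # daily probability of the event
    loss = model.inv_cdf(p)                 # the model always returns a number
    expected_in_sample = p * len(returns)   # such days we expect in the sample
    results[label] = (loss, expected_in_sample)
    print(f"once a {label:<10} loss {loss:8.2%}   "
          f"expected observations in sample {expected_in_sample:.4f}")
```

The model reports a loss for every horizon, yet from the once-a-decade event onwards the sample is expected to contain less than one such day. Those numbers come from the assumed shape of the distribution, not from the data.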

Endogenous and exogenous risk, and the ‘one day out of a thousand’ problem

Financial risk can be divided into two categories: exogenous and endogenous, according to Danielsson and Shin (2003) and Danielsson et al. (2009).

Exogenous risk is predicated on the idea that the likelihood of market outcomes comes from outside the financial system, similar to the possibility of an asteroid hitting Wall Street.

Endogenous risk assumes that interactions among the economic agents within the financial system drive its outcomes.

Risk measurement is straightforward if risk is exogenous, as we only need historical prices to estimate the data generation process. Not surprisingly, almost every risk model in use today implicitly assumes risk is exogenous. The reason is that it is much easier to model exogenous risk, and such models are well-suited to regulatory and compliance processes.

That is usually not a major worry. The aggregate outcome of the decisions of a large number of heterogeneous market actors resembles random noise most of the time. Provided they have specific needs, circumstances, and obligations that evolve relatively independently, then their decisions will also be independent, and the aggregation of a large number of them will be almost unpredictable and, hence, indistinguishable from noise. Under these circumstances, risk can safely be assumed to be exogenous.

Unfortunately, this assumption fails at the worst possible time. Classifying extreme outcomes as random, as is required if we are to treat risk as exogenous, is wrong. Extreme outcomes are not random. Instead, they have logical explanations, and the risk of them happening can be assessed.

The explanation is related to the motivations of market participants. Profit is usually what matters most, perhaps 999 days out of a thousand. However, on that one last day, when great upheaval hits the system and a crisis is on the horizon, survival, rather than profit, is what they care most about: the ‘one day out of a thousand’ problem.

When financial institutions prioritise survival, their behaviour rapidly shifts. They begin hoarding liquidity, choosing the most secure, liquid assets, such as central bank reserves, leading to bank runs, fire sales, credit crunches, and other undesirable behaviours associated with crises. There is nothing untoward about such behaviour, and it cannot be easily regulated.

The survival instinct is the strongest driver of financial turmoil and crises. That is precisely when endogenous risk becomes most relevant since it reflects the risk of market players' interactions, which no longer resemble random noise. Instead, market participants act a lot more harmoniously, buying and selling the same assets at the same time.

The shift in behaviour from profit maximisation to survival causes a structural break in the stochastic process of financial market outcomes.
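The contrast between independent and harmonised behaviour can be sketched with a toy simulation. Each agent either buys (+1) or sells (-1). In normal times the decisions are independent, and in survival mode everyone trades the same way. The number of agents, the number of days, and the all-or-nothing coordination are deliberately crude assumptions for illustration only.

```python
import random
import statistics

rng = random.Random(7)
N_AGENTS = 1000  # assumed number of market participants
N_DAYS = 500     # assumed number of simulated days per regime

def net_order_flow(coordinated: bool) -> int:
    """Sum of buy (+1) / sell (-1) decisions across all agents for one day."""
    if coordinated:
        # Survival mode: everyone trades in the same direction.
        return N_AGENTS * rng.choice((-1, 1))
    # Normal times: each agent decides independently.
    return sum(rng.choice((-1, 1)) for _ in range(N_AGENTS))

normal_days = [net_order_flow(False) for _ in range(N_DAYS)]
crisis_days = [net_order_flow(True) for _ in range(N_DAYS)]

sd_normal = statistics.pstdev(normal_days)  # roughly sqrt(N_AGENTS)
sd_crisis = statistics.pstdev(crisis_days)  # roughly N_AGENTS
print(f"std of net order flow, independent agents: {sd_normal:.1f}")
print(f"std of net order flow, coordinated agents: {sd_crisis:.1f}")
```

With independent agents the aggregate scales with the square root of the number of agents and looks like noise. With coordinated agents it scales with the number of agents itself, so a model estimated on the first regime says very little about the second.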

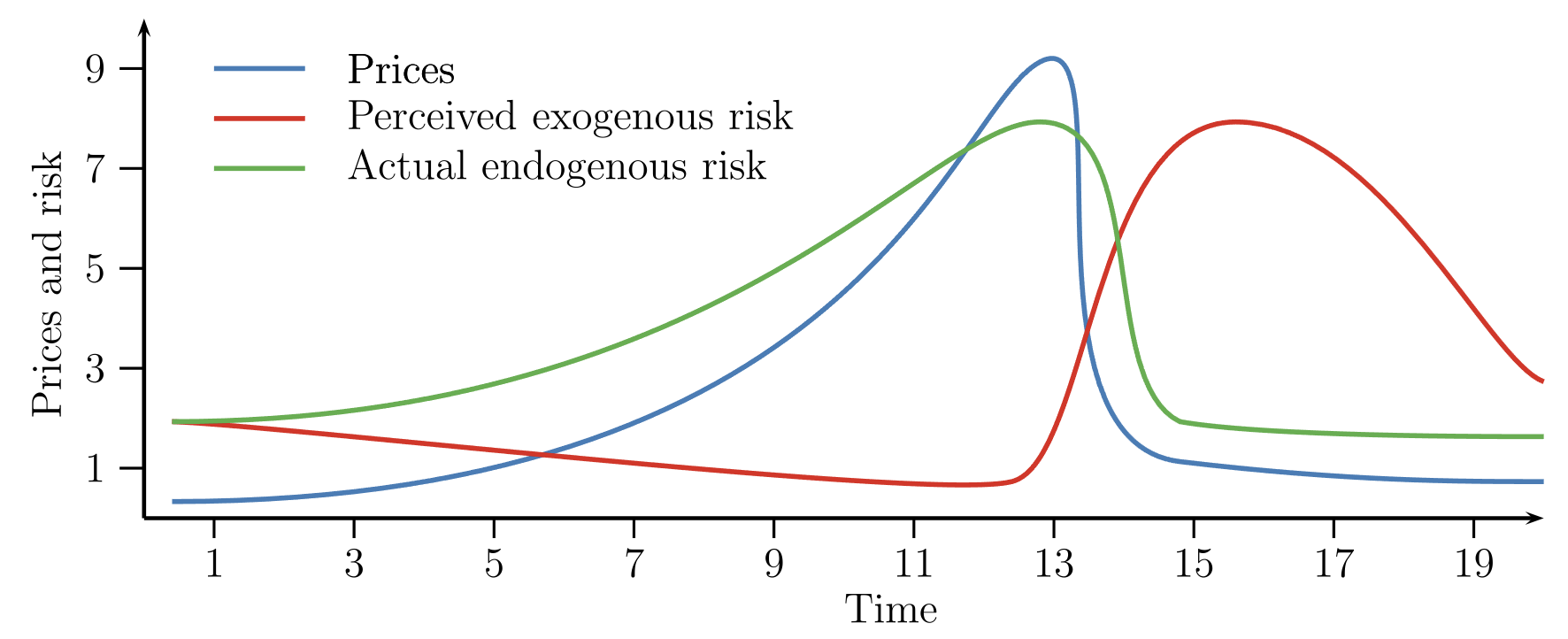

Figure 2 illustrates the problems of risk measurements across financial turmoil.

Figure 2 Endogenous versus exogenous risk

The blue line shows a typical price bubble. Prices increase at an ever-faster rate and then suddenly collapse: up the escalator, down the lift. The red line indicates perceptions of exogenous risk. It initially falls because volatilities typically drop as we go up price bubbles. However, the actual endogenous risk increases along with the bubble and falls with it.

How do we measure extreme risk?

We should not use a stochastic process estimated with day-to-day observations to produce predictions about more extreme events. All we end up with is risk model hallucinations.

The problem of extreme consequences must be addressed differently. Start by acknowledging that extreme events capture what happens when the convenient assumption of exogenous risk collapses. Any sensible analysis considers the underlying drivers of market stress: the survival instinct fuelled by leverage, liquidity, and asymmetric information.

When we examine how financial institutions behave during times of stress, we have ways to map the composition of their portfolios to their behaviour. When we aggregate this across the system, we can get a measurement of systemic financial risk and, more importantly, learn how to develop resilience. At least in theory.

There are two main reasons why that might not happen.

The first is that it is technically far more difficult. Instead of merely feeding past prices into an algorithm and asking it to generate risk for events we care about, we must delve deeply into the structure of financial institutions and the system to understand how they interact. That is much harder to do.

The second is that such analysis is more subjective and difficult to formalise and, hence, not very conducive to either financial regulations or compliance.

There is a conflict between requiring rigorous analysis and just demanding a number. The second approach is easier because it is simpler to communicate, and the users do not need to understand how the numbers were obtained or what they mean. I think there is often a wilful desire not to know how risk numbers are made up, as that creates plausible deniability. It is much easier to get the job done if the numbers are seen as true.

Conclusion

The financial system creates a massive amount of data, which might suggest that it is relatively easy to estimate the distribution of market outcomes accurately. If so, we could safely ignore the impact of individual activities and treat the aggregate behaviour of all market players as random noise. In other words, we could assume risk is exogenous.

Unfortunately, that disregards the incentives of financial institutions, profit in normal times and survival during crises. Such a behavioural change causes structural breaks in the stochastic process of asset markets, which means that a model estimated in normal times is not very informative about stress outcomes.

It is simple to ask a model to generate probability estimates for any outcome we wish, even when the model was not trained on such data. However, that is just a risk model hallucination, even if many users take those numbers as credible. We get numbers that have a tenuous connection, at best, to reality, undermining the decisions taken on the basis of those numbers.

Risk model hallucination is especially significant in applications that deal with extremes, such as pension funds, reinsurance, sovereign wealth funds, and, most crucially, financial regulation.

References

Danielsson, J and C Zhou (2015), “Why risk is hard to measure”, VoxEU.org, 25 April.

Danielsson, J, H S Shin and J-P Zigrand (2009), “Modelling financial turmoil through endogenous risk”, VoxEU.org, 1 March.

Danielsson, J and H S Shin (2003), “Endogenous Risk”, in Modern Risk Management: A History, Risk Books.

Danielsson, J (2009), “The myth of the riskometer”, VoxEU.org, 5 January.

Taliaferro, D (2023), “Constructing novel datasets with ChatGPT: Opportunities and limitations”, VoxEU.org, 15 June.